Last month, I wrote two essays exploring the ideas of neuroscientist Paul Cisek, based at the University of Montreal, in relation to AI and cognitive science. He was kind enough to let me interview him—thanks Paul!—and now I’m happy to share our conversation with y’all. (You don’t need to have read the previous essays, but it wouldn’t hurt.)

Our conversation ran long, so in case you’re inclined to skip around: we begin with AI, proceed to Cisek’s challenges to the cognitive model of the mind and his alternative hypothesis, and then briefly return to AI.

I first became aware of you through the magician metaphor you used to describe the strange way some scientists and AI enthusiasts are behaving when it comes to AI. Can you restate that for us?

My point is that with AI, we know what's under the hood. The way large language models work is that they predict the next word, and they do so by taking advantage of the statistics of language, of how words tend to follow one another. LLMs do this, and it's remarkable that it works so well at producing meaningful text. It’s amazing in many ways.
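To make “the statistics of how words tend to follow one another” concrete, here is a minimal sketch, assuming a toy bigram model: it counts which word follows which in a tiny training text and predicts the most frequent successor. The corpus and function names are hypothetical, and real LLMs use transformer networks conditioned on long stretches of context, so this shows only the bare idea of next-word prediction, not how any actual model is built.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Toy model (hypothetical): count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if `word` never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat  ("cat" follows "the" twice)
print(predict_next(model, "dog"))  # None (never seen in the training text)
```

The toy model has nothing to say about a word it never saw, a crude analogue of what happens at the edge of a model's training distribution.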

But this is where the magicians come in.

Professional magicians know what their tricks are. They know where all the little strings and hidden compartments are, and how to misdirect the audience. There’s no actual magic. Yet the people who build LLMs, the people who know what’s under the hood, are like professional magicians who perform their tricks and then go backstage and ask each other, “Well, maybe there really is magic?”

Which of course there isn’t. We know how they did the trick.

Let me play devil’s advocate by proxy. When the magician story was circulating on social media, Murray Shanahan, who is at Google DeepMind and is one of my favorite AI thinkers, suggested perhaps we don’t *really* know what’s going on under the hood with either AI or humans. It’s very difficult to trace how LLMs produce particular responses to given prompts, and it’s all but impossible to trace the path of human thought.

So maybe we don’t know what’s happening under either hood?

Sure, we don’t know everything about the brain. But we know enough to reject certain hypotheses, and the idea that it’s like an LLM is among those we can reject outright.

I think you need to do experiments on it. You can do experiments to test the ability of an LLM to understand something. And it's very easy to get an LLM to give you a ridiculous answer. When I ask it something I really do know about in neuroscience, its answers aren't that great—because some of what I know is obscure, and not a big part of the LLM’s training set. And so they cannot make intelligent statements when they're at the edge of their distribution.

Now, some might say, let’s give an LLM a bunch of prompts, see how often it does well, and measure the percentage of the time it actually says something sensible: a performance analysis. It's gonna do well. And a lot of people in the AI world would consider that to be the measure of success. If the LLM produced responses that were right 99.6% of the time, that’d be very good, right?

But that doesn't work in science. Newton's theories of space and the motion of objects are 99.99% accurate. In fact, if you compared Newton’s theories to Einstein’s purely with a model comparison of which best fits the data, and penalized Einstein for having a few extra parameters, Newton would win hands down.

But when tested in that 0.01% of cases where Newton’s theories actually make a different prediction from Einstein’s theory of relativity, Newton fails. The only cases that matter are those where the two theories diverge and make different predictions. So when you do targeted experiments with LLMs, testing something that goes beyond what they’ve been specifically trained on, they don’t do so well.

And those are the tests that matter most.

Ok, continuing to play devil’s advocate by proxy, what you’re describing sounds like what François Chollet has tried to do with the ARC-AGI test, which I’ve written about previously. Like you, he said that LLMs would struggle on tasks that they weren’t trained specifically to solve. Yet the new “reasoning models” have shown a huge jump in performance on the abstract problems contained in the ARC test.

How do you respond to that?

I think that what's being done right now is kind of heading in the wrong direction. It’s a faith in data, and that by fitting the data, you achieve understanding.

That’s not how we learn, right? Alison Gopnik famously says that kids are scientists. They try stuff, and that's how they build their understanding. A kid pushes on something, it falls over, and they learn. They learn about the world by interacting with it, and by trying specific things, not just wildly flailing around, because they're testing their knowledge, essentially the way scientists do. That’s how humans learn.

And that's not what AI models are doing. They're passively absorbing patterns that have been produced by others. The humans who made those patterns, they interacted with the world, they had an understanding of the structure. But observing that structure by a passive system that just sort of absorbs it is not the same as understanding.

I don't think that that's what intelligence is, and I don't think the way to get there is just by making the models bigger, you know? We've been down this road many times already in history, and I think people should be aware of that history (because you know what they say about history).

The role of language in cognition is one I think about a lot. I often think about Helen Keller, and that moment when she realized that the movement of fingers in her hand by her teacher, Anne Sullivan, represented the word for water. Keller said that once she understood language, the “living word awakened my soul, gave it light, hope, joy, set it free.”

How would you respond to AI enthusiasts who believe “artificial general intelligence” will emerge similarly?

I don't buy it. Helen Keller had impoverished sensory experience, but she was still continuously interacting with the world, and she understood that world non-linguistically long before that moment of insight. And so she had a foundation of semantics, a semantics of basic interactions with the world, that was not verbalized. Babies have this too before they learn how to talk.

Helen Keller interacted with the world; she was able to discover causal structure in it despite her sensory deprivation. She had the foundation that she could then tack words onto. And tacking the words on top of meaningful interactions is not a small thing, but it is not, on its own, sufficient to create meaning.

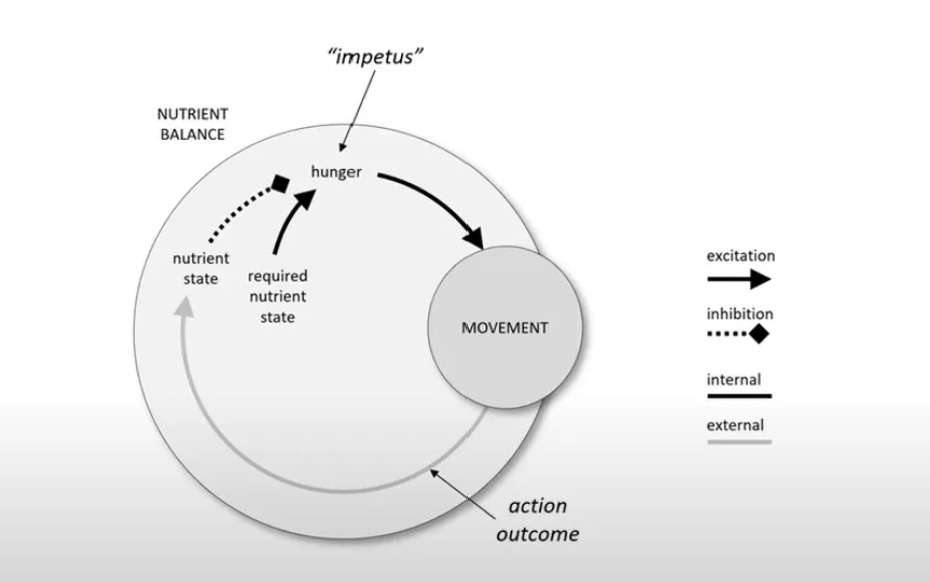

This takes us into cognitive science and neuroscience and away from AI. This is where perceptual control theory, ecological psychology, and the other stuff that I obsess about come in: they take a feedback-control view of behavior. Meaning has to do with the consequences of doing one thing versus doing another.

And this has been happening with animals for literally billions of years. It’s been going on in humans long before there’s language. Yes, language is something that we’re impressed by, and very dependent upon—but it’s still something that comes on top of our semantic foundation of meaning.

Of course people have been saying this for years. Yet, with all due respect to the AI world, they seem to have ignored that entirely. It’s bizarre, it’s like we’ve gone down this dead end already, but now we’re going to pursue the same dead end again, only bigger.

What do you mean by semantics in this context?

The meaning of words is the semantics of language. But I would say, forget the semantics of words for a moment. What is it to understand the world? In general, it’s to understand the meaning of things, right? And I think it’s better to first ask that question outside the context of language, and only bring in language after you have a general idea about how we construct meaning from our experiences of the world.

And you have some ideas about how that happens. My interpretation of your ideas is that you see the brain as a tool for behavioral control, one that shapes the stimuli we take in through the choices we make. Humans are animals, and using evolutionary theory, you trace across evolutionary time the abilities we possess that, from a neuroscientific perspective, are built into our brains.

How accurate is that?

Let’s start with animals and then work up to humans.

Any creature in order to remain alive has to take an active role in maintaining itself. This means seeking out favorable states, such as where it is fed and warm and not being eaten by some other animal.

To do that, in all living things, there is a feedback-control system. We sense when things are unfavorable and we move toward more favorable states. That’s an incredibly basic understanding, but it can be extended to behavior. Think of, say, temperature regulation as a feedback-control system. If you're a reptile, one way to control your temperature is to move to a place where the temperature is different, right? And that's in fact what reptiles have to do to stay alive.
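The reptile example can be sketched as a simple negative-feedback loop. This is a minimal illustration, assuming a hypothetical one-dimensional world of cooler and warmer spots, a made-up preferred body temperature, and a made-up heat-exchange rate; it is not a model of real reptile physiology, nor of any formalism Cisek uses.

```python
# Hypothetical toy world: ambient temperature (°C) at five spots along a line,
# from deep shade (index 0) to a sun-baked rock (index 4).
AMBIENT = [15.0, 20.0, 28.0, 35.0, 42.0]
SETPOINT = 33.0       # assumed preferred body temperature (°C)
TOLERANCE = 1.0       # errors smaller than this trigger no movement
EXCHANGE_RATE = 0.3   # fraction of the body/ambient gap closed per time step


def step(position: int, body_temp: float) -> tuple[int, float]:
    """One pass around the loop: sense the error, act by moving, let the world respond."""
    error = SETPOINT - body_temp
    if error > TOLERANCE and position < len(AMBIENT) - 1:
        position += 1   # too cold: move toward warmer ground
    elif error < -TOLERANCE and position > 0:
        position -= 1   # too hot: move toward cooler ground
    # The environment closes the loop: body temperature drifts toward
    # the ambient temperature of wherever the animal now sits.
    body_temp += EXCHANGE_RATE * (AMBIENT[position] - body_temp)
    return position, body_temp


def simulate(steps: int, position: int = 0, body_temp: float = 16.0) -> list[tuple[int, float]]:
    """Run the loop for `steps` time steps, recording (position, body_temp) after each."""
    history = []
    for _ in range(steps):
        position, body_temp = step(position, body_temp)
        history.append((position, body_temp))
    return history


for t, (pos, temp) in enumerate(simulate(20)):
    print(f"t={t:2d}  spot={pos}  body={temp:5.1f} °C")
```

The simulated animal never builds a map of the terrain; it simply keeps acting on the gap between its current and preferred temperature, and ends up shuttling between warmer and cooler spots that keep it near the setpoint.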

The whole point of having a brain, frankly, is to generate outputs that modify your state of existence in a favorable way. We can better do that when we understand the contingencies between what we do and what the results are likely to be.

For example, and getting to humans now, think of a baby that’s hungry. The baby can’t move himself very well, but he can make a noise, and lo, food will appear! And that's because in the world of the baby, there are parents, and the parents will take care of whatever the baby's needs are. The baby essentially is exerting control through the parents to modify his internal state in a favorable way. And it works because parents are typically very reliable. Do you have kids?

I do. I have one daughter.

I have one child too, a son, who’s been controlling us for 12 years and doing it very well. It's about producing the output that has a desirable result—that's what behavior is about, right? And I really don't think anyone would disagree with this, even the most staunch, classical cognitive scientist would not disagree. But the question then becomes, what are the consequences of that?

My claim is that it changes everything about how we understand the organization of the brain. I'm not the first to say this, but because the brain is organized as a control system, it's not about building explicit representations of the world. I mean, those representations are useful, but they’re not really the fundamental underlying purpose of the brain. The real purpose is to establish control policies with respect to properties of the world.

In other words, if the world has certain dynamics, then the job of an animal is essentially to complement those dynamics in such a way that the end result from its interaction with the environment is one that leaves the animal in a better state.

I agree with the biological story you tell. When you say “internal representations” is not really what the brain is about, I agree that's true from a biological standpoint.

But the cultural account of cognitive development suggests that internal representations are not only part of our story, but actually what make us unique. In your lectures you draw a big red X over the cognitive model, but I don’t see them in conflict.

Why am I wrong about that?

Because the reason for drawing the red X comes largely from human data. Many scientists have looked at this, and when you try to match up, say, neural imaging data from fMRI to the classic cognitive science taxonomy, it doesn’t have the explanatory power that it should if that model accurately described how the brain functions.

It is true that something extraordinary happens with humans and culture, and we develop new capabilities, such as the ability to abstract. My point is that you can’t understand this emergence if you don’t understand what it’s emerging from. And what it's emerging from really doesn't look like the classical cognitive science architecture, but rather more a sort of embodied cognition theory, an ecological psychology architecture.

Given the emphasis you have on this feedback loop of behavior and engagement with the physical world, I'm not sure where something like self-reflection fits in. Sometimes I just like to think about things.

How do you account for that?

Well, I can't account for everything. Maybe we're not ready yet to ask that question in a way that will lead us to an actual correct answer.

It’s a bit like the ancient Greeks. They were brilliant, right? And they had all kinds of brilliant theories…but they didn't know some things that we now know. So they just were not ready to ask questions about the nature of the universe in the way that we can today. It’s not that they lacked intelligence. It's just that they didn't have the basis to ask the questions correctly.

I think that there are some questions about human cognition we’re just not ready to ask, even though we're really, really eager to know the answers. Though I agree with you it’s a fascinating question. I just don’t know if we’re ready for it.

Well, here we diverge, and I think it’s because you look at things through the biological lens, whereas I see things through the philosophically pragmatic lens of Richard Rorty and John Dewey. For them (and me), there’s no “right time” to inquire into the concept of self-reflection. The criterion they’d use instead would be, “Is self-reflection a useful description or metaphor to invoke right now to help us understand ourselves?”

From that standpoint, intellectual development does not move in the same way that biological development does.

I think you're right about that. But I think science goes that way as well, right? We come up with constructs, which we then use to define research agendas and research programs. But if you don't have the constructs right, you're going to be in trouble. You’re going to go around in circles for a long time.

And I think that's what's been going on with cognitive science. It's remarkable to me how many people from all kinds of different directions are saying that essentially, we really need to rethink the conceptual toolbox of cognitive science.

The ideas of cognitive science are pretty old, and they were formulated at a time when we knew less than we do now. And the field also goes through these throw-the-baby-out-with-the-bath-water moments every several decades, where it’s forbidden to say “consciousness” or “behavior” or whatever.

But now along comes AI and gives the dying horse a booster shot. And it frustrates me a bit, because some of the metaphors of cognitive science have outlived their usefulness, but we may be stuck with them for another 20 years, and I’ll be 76 by then.

This is me feeling optimistic in this moment, probably because I'm stimulated by this rich conversation, but I genuinely think that AI presents an opportunity. We now have a tool that we can play with that loosely emulates our thinking. And I’m seeing people ponder things they probably never pondered before, such as, what do we mean by reasoning? What is understanding? What is consciousness?

Now everyone is wondering just what the hell's happening in the mind. That excites me.

Yes, but we've had those conversations. In fact, 50 years ago, we had those conversations, right? And conclusions have been made that may be correct, or they may be wrong, but people now are just unaware that we’ve already covered a lot of this ground.

I think the problem that we're having right now is that we're asking these questions in the context of two very different things that do impressive stuff, humans and LLMs. We’re trying to answer the questions on the basis of their similarities and differences. And I think it's a complete red herring, because their similarities are superficial and their differences are dramatic.

Again, we know what's under the hood! We know there are no transformer models in the brain. We know that young children do not learn by devouring the entire Internet. We know these things already, yet we’ve got many people wondering if maybe magic happens, and the latent structure of language can create something like a human.

No, I don’t think so. That’s not what brains do. We know enough about the brain to know this is incorrect. And yet now we’re stuck debating a metaphor that’s incorrect.

Well I think it’s vital, not just for scientific reasons but also for sociocultural reasons, to have scientists sharing their skeptical takes on AI publicly. The hype is out of control, people really want to use AI to teach kids. And educators are being told this is “the future” and that resisting it makes them dinosaurs or luddites or whatever.

So I’m grateful that you're speaking out and just saying no.

Some people make the analogy to resisting calculators, right? Calculators are useful, but calculators also never make a mistake. If I knew that my calculator would be wrong one out of a hundred times, I would never use it.

I gotta tell you, if my students start sending me AI-driven essays, then I'm gonna have an AI do the grading. And we can all do something else with our time other than teaching and learning.

That's ultimately not what we want. The last place where we should be replacing humans with AI is education.

Thanks again to Paul Cisek for expanding my very human cognition!

