A challenge to the cognitive model of the mind

Paul Cisek questions the dominant scientific paradigm and the current theory of artificial intelligence

If there’s hope in defeating irrational AI exuberance, it may lie with the neuroscientists.

Let me explain. Last year, I wrote not one but three essays related to the contention that human language is a tool for communicating our thoughts, but is separate and distinct from thought itself. Evelina Fedorenko, a neuroscientist at MIT and lead author of the paper laying out the empirical evidence for this claim, was even kind enough to let me interview her. Her basic argument is that we know language must be separate from thought because (a) people who lose language ability can still think and reason, and (b) different parts of the brain activate when we engage in different types of thought, and often the “language part” remains idle when we’re thinking. In my view, this evidence deals a serious blow to the hopes of achieving “artificial general intelligence” through the scaling of large language models since, after all, they are language tools (it’s in the name).

Enter now stage left Dr. Paul Cisek, a neuroscientist at the University of Montreal, to throw some gasoline on that fire. Cisek first came across my radar last year when a pithy observation he made about LLMs started making the rounds on social media. You can read his full comment here, but to summarize:

We know that humans in general can falsely impute intelligence and agency to complex events that take place in the world, as we’ve seen humans do this in the past when interacting with a chatbot such as ELIZA, or claiming the gods make volcanoes explode.

But although modern-day LLMs are complex, researchers know quite a bit about how they function, through pattern-matching and use of mathematical theories (among other things).

Thus, although the public may be inclined to attribute sentience and agency to LLMs, scientists should know better. Cisek: “We are like a bunch of professional magicians, who know where all of the little strings and compartments are, and who know how we just redirected the audience’s attention to slip the card in our pocket…but then we are standing around backstage wondering, ‘Maybe there really is magic?’”

There isn’t any magic. But a big challenge we face is that the companies that produce LLMs are willfully trying to convince us otherwise, and are working to take advantage of the human impulse to ascribe agency to these tools. Yet, as Colin Fraser and Murray Shanahan argue, an LLM is better thought of as a role-playing character, a fictional entity that’s been trained to produce text that’s suggestive of certain personality traits, the same way an actor is trained to say and do things that are suggestive of their character. LLMs, like actors, create the illusion of personhood.

Ok, so back to Cisek. This past weekend I found myself watching his lectures and reading his published research, including this video of a recent presentation wherein he suggests our dominant cognitive model of the mind is at odds with our evolutionary history. Further, he contends that because we’re using the wrong mental model to understand ourselves, any AI effort that over-relies on this (mistaken) mental model is bound to disappoint.

To get to his argument about AI, we’re going to first need to spend some time understanding his argument about human cognition. Fair warning, this is about to get technical, but hopefully it’s worth your time. The good news is that Cisek uses well-organized slides in his talks, as you’re about to see.

Let’s start with the existing scientific paradigm for human minds, the cognitive model. For the past, oh, 50 to 75-odd years, the dominant scientific model of what happens between our ears has been thought of as “information processing” or “computation.” The basic idea is that we take in some input from the environment; we process this input in part by drawing upon our existing knowledge (our memory); and then this ultimately leads to some output, some action we take. Like so:

Does that look familiar? It should, because it’s basically the same model of the mind that I use, the one I’ve shamelessly cribbed from cognitive scientist Dan Willingham, and sprinkled all over this Substack:

This is the simple model of the mind, but now let’s make it more complex. Cisek observes that this model treats input-to-output as a function, in the classic mathematical sense (you know, f(x) = y, that sort of thing). What’s more, under this model, we can break apart (or “decompose”) our functional behavior into smaller mental subfunctions. If that’s too abstract, this should help:
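For readers who think in code, here is a minimal sketch of that input-output picture. It is my own illustration, not something from Cisek's slides: the stage names `perceive`, `think`, and `act`, and the toy `memory` lookup, are all hypothetical stand-ins for the "subfunctions" the model assumes.

```python
# A toy version of the classic cognitive model: behavior is one function,
# decomposed into smaller subfunctions. All names here are hypothetical.

def perceive(stimulus: str) -> str:
    """Turn raw input into a cleaned-up percept."""
    return stimulus.strip().lower()


def think(percept: str, memory: dict) -> str:
    """Consult existing knowledge (memory) to pick a decision."""
    return memory.get(percept, "ignore")


def act(decision: str) -> str:
    """Turn the decision into an output action."""
    return f"action:{decision}"


def behave(stimulus: str, memory: dict) -> str:
    """The whole mind as f(x) = y: input in, output out, then done."""
    return act(think(perceive(stimulus), memory))


memory = {"apple": "eat", "snake": "flee"}
print(behave("  Apple ", memory))  # action:eat
print(behave("rock", memory))      # action:ignore
```

Notice that each call to `behave` starts and ends cleanly: nothing the function outputs feeds back into what it receives next.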

Per this model, Cisek observes, we find the mind right there in the middle, between the input we perceive and the actions we take:

It’s at this point in his talk that Cisek draws a big red X over this model and says it’s wrong. Or at least incomplete.

Why? Well, in Cisek’s view, we should stop conceiving of our behavior as a discrete input-output function but rather as something that is continuous, akin to a circuit where there is constant flow between ourselves and the world around us. Our brain, under this view, is the part of our body that continually controls our actions—a control system, in other words. We use this system—or perhaps, the system uses us—to adjust our position in the world, and thereby change the input we take in.

To make this more concrete, consider one of our most basic needs: food. When we lack food, we feel something undesirable that we call hunger. Our hunger thus creates an “impetus” to move from our current state to the more desired state of being satiated. But how? Our behaviors are contingent upon the opportunities (or “affordances,” in Cisek’s terminology) that exist in the world around us. And if we don’t like what the world is offering up, and/or we think there’s a better option out there, we move. This is the micro-model he offers¹:

Food is just one need animals have, of course; safety is another. One nice feature of Cisek’s model is that it allows for continuous, parallel processing of competing needs, an endless series of mental trade-offs that animals (including humans) must make when making decisions in the real world. In this sense, it’s a continuous model of thought, rather than discrete input-output decisions.
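To contrast this with a one-shot function, here is a minimal sketch of a behavior control loop for the hunger example. It is my own toy illustration under loud assumptions, not Cisek's model: the one-dimensional `FOOD` world, the `Animal` class, and the numbers (hunger on a 0 to 10 scale, a satiety threshold of 2) are all invented. A fuller version would weigh competing needs such as safety in the same loop.

```python
from dataclasses import dataclass

# Hypothetical 1-D world: how much food each position affords.
FOOD = {0: 0, 1: 0, 2: 5, 3: 10}


@dataclass
class Animal:
    position: int = 0
    hunger: int = 8  # 0 = satiated, 10 = starving (invented scale)


def sense(animal: Animal) -> int:
    """What the world affords depends on where the animal has put itself."""
    return FOOD.get(animal.position, 0)


def step(animal: Animal) -> str:
    """One pass around the loop: sense, act, and thereby change the next input."""
    food_here = sense(animal)
    if animal.hunger <= 2:
        return "rest"  # no impetus, so nothing to correct
    if food_here > 0:
        animal.hunger = max(0, animal.hunger - food_here)
        return "eat"  # exploit the current affordance
    animal.position += 1  # nothing on offer here, so move
    animal.hunger = min(10, animal.hunger + 1)  # moving costs energy
    return "move"


def run(animal: Animal, steps: int) -> list:
    """Behavior is the ongoing loop, not a single call."""
    return [step(animal) for _ in range(steps)]


print(run(Animal(), 5))  # ['move', 'move', 'eat', 'eat', 'rest']
```

The point of the sketch is the feedback: moving changes `position`, which changes what `sense` returns on the next pass, so the animal's actions partly determine its own future input.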

Now’s a good time for a little break. Refill your coffee. Play Wordle. Ignore the news, the news is not good right now.

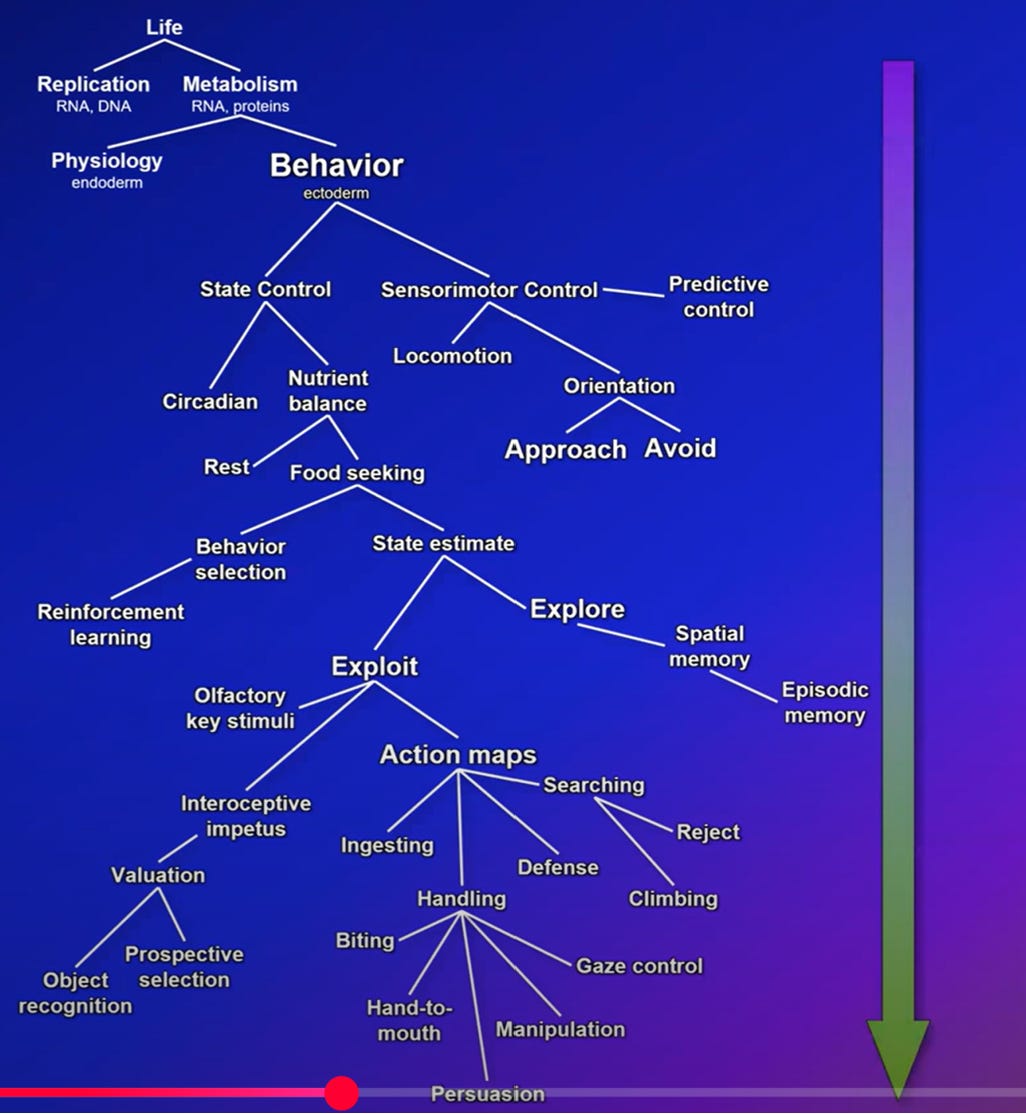

Ok, so. Cisek has this alternative model of the mind as a behavior control system, but what’s his evidence? Here’s where he embarks on a fascinating and comprehensive (albeit brisk) walk through millions of years of the biological evolution of brains. Things start to get very neuroscientific-y and beyond my rudimentary understanding of brain regions and the like, but the scientific story he’s telling is actually pretty simple: Over the past billion years, we see the continuous emergence and elaboration of cognitive capacities that allow for a wider and deeper range of behavioral controls. Here comes some cognitive overload:

That’s a lot to throw at you in a single slide, I know—but trust me, in his lecture Cisek patiently explains how each of these stages changes how animals use their brains to control the inputs they receive from the environment. Again, I won’t pretend that I’m in the position to evaluate the underlying neuroscience he’s citing. The upshot, however, is that Cisek’s thesis produces a very different model of the mind from the current paradigm, one that looks like this:

That arrow on the right shows the progress of time and complexity in cognitive capabilities. In other words, as animals have evolved, we’ve added new behaviors that our brains control. What’s more, Cisek contends, the anatomical structure of brains aligns to these behaviors, in ways that make for a neat layering:

This is cool, right? I think it’s cool. Again, I am woefully ill-suited to evaluate the neuroscientific evidence he’s citing, but the scientific story Cisek tells thus far makes eminent sense to me. Biological evolution has surely shaped our brains as much as the rest of our bodies.

And so Cisek leaves us with this compare-and-contrast between his proposed model and that of “classic” cognitive science:

Maybe he’s right, maybe not—more on that momentarily. But let’s say he is. Where does that leave AI?

Implications of Cisek’s model of the mind for AI

Let’s recap Cisek’s main claims as I understand them:

The simple model of the mind as an information processor that takes input and produces output is mistaken.

We should instead see minds as control systems that guide behavior as part of a continuous process, like a circuit.

Over hundreds of millions of years, biological evolution has expanded the range and depth of behaviors that our minds can control.

If Cisek is right (we’ll come back to this very important if), what are the implications for artificial intelligence? The main problem is that generative AI systems, at least in the form of large language models that predict the next token, are mere input-output devices. What’s more, they are passive recipients of whatever text we choose to feed into them: they cannot move about the world, nor can they explore it of their own volition. They have no impetuses and they seek no affordances. They do not control their own behavior. We do.

Although Cisek doesn’t make this point explicitly in his lectures, I’d add that there’s also the sheer question of time. Scroll back up and look at the evolutionary timescale we’re talking about: literally a billion years. The human mind simply cannot comprehend the vast range over which biological evolution occurs; at best we get a single century to experience the world around us. We developed computers within just this past century, and generative AI really in the last five years. Do we really think that we can come close to emulating the product of this incomprehensible history by scraping all the data on the Internet and processing it using Nvidia chips?

Of course, that is speculation on my part. But so too is the claim to the contrary, that we can design intelligence, and remember, there is no magic inside LLMs. At heart, I understand Cisek to be saying that things (animals) that are alive are the product of evolution, which is to say, of sustained interaction with the environment. If understanding that history is essential to understanding what our brain really is for, then perhaps we need to stop focusing on artificial intelligence and start theorizing more about artificial life. As he puts it, we need to close the loop:

Last point. After spending 1,500 words explaining Cisek’s theory and ideas in detail, you might reasonably assume I’m tacitly endorsing his theory. Not entirely. I find it interesting and thought-provoking for sure. But as I’ve written previously, I very much believe that cultural evolution plays a huge part in shaping human cognitive architecture. And at least thus far, I’ve yet to discover whether or how Cisek explains the radical cognitive leaps that have occurred in Homo sapiens over the past 200,000 years, and the last 10,000 years in particular. Biological evolution is part of the human story, but it’s only one part. We cannot account for what makes humans such “peculiar animals,” to quote Cecilia Heyes, without taking into account our unique cultural practices.

When it comes to our minds, what’s the balance between the biological and the cultural? I’m hoping to return to this question soon. Maybe even next week. No promises.

¹ As Cisek notes, this is not a new idea: that’s John Dewey he’s citing there. Later, Jean Piaget will pop up. And elsewhere I think I saw him citing Lev Vygotsky. That’s three of the biggest bigwigs of educational psychology of the past 125 years. We’ll come back to the education implications of all this at some point, but not today.

The cognitive model you describe is Cartesian, and it was subject to numerous philosophical critiques throughout the 20th century but without much apparent impact. Cartesian approaches in neuroscience, cognitive science, and AI trundle on despite not having much in the way of credible responses to their various well-documented failings. Hubert Dreyfus has joked that cognitive science and AI bought a philosophical lemon just as philosophers were dumping it. But current discussions of AI make very little reference to these critiques. There appears to have been much more of a debate on these matters in the 1980s and 1990s. I am not sure why that is the case.

I had not encountered Paul Cisek's work until your post so I'm not sure what to make of it. I'd be interested to know more about how he connects his work to Dewey and Vygotsky. I have added a link to a paper by Rom Harre on Vygotsky and AI. The title is a bit misleading. It's one of several critiques he discusses. As you are citing neuroscientists as an antidote to irrational AI exuberance, another work, which I have not read, but is often cited in this context is Bennett and Hacker's book attacking Cartesianism in modern cognitive neuroscience.

Bennett, M. R., and P. M. S. Hacker. Philosophical Foundations of Neuroscience. 2nd edition. Wiley-Blackwell, 2022.

https://www.wiley.com/en-us/Philosophical+Foundations+of+Neuroscience%2C+2nd+Edition-p-9781119530978

Harré, Rom. “Vigotsky and Artificial Intelligence: What Could Cognitive Psychology Possibly Be About?” Midwest Studies in Philosophy 15, no. 1 (1990): 389–99. https://doi.org/10.1111/j.1475-4975.1990.tb00224.x.

Thanks for this pointer to Cisek. And for the Dewey footnote.

It sounds like Cisek is retelling the story of how the human mind works that you'll find in Principles of Psychology and in what gets called the Chicago School. Not the one with economists in the 1950s. The one with social scientists, including Dewey, in the 1890s.

The idea of a stream of consciousness and the notion that language is a process or technology that humans use to adapt to their environment were new, post-Darwinian ideas when James pulled it all together. Ben Breen, who writes at Res Obscura newsletter, is writing what sounds like an amazing book about how James's approach to studying the mind lost out to people like Galton, who prefer to measure things as precisely as possible and speculate from there.

As someone who would desperately love to see these ideas revived outside the weird group of historians and an even smaller group of philosophers who think about this stuff, I'm thrilled that there is someone I can point to who speaks the language of twenty-first-century neuroscience.