People thinking without speaking, part three

A conversation with MIT neuroscientist Ev Fedorenko

Language is primarily a tool for communication rather than thought. That’s the provocative title of a remarkable paper in Nature authored by Ev Fedorenko, a neuroscientist at MIT, along with her two co-authors Steven Piantadosi and Edward Gibson. In June, I wrote two essays exploring why I think this paper deals a near-fatal blow to the hope that scaling AI models will lead to artificial general intelligence. My gloss on Fedorenko’s argument sparked some interesting debate, and ultimately led to Ev graciously agreeing to let me interview her to explore her ideas in more detail. And now I’m happy to share her thoughts with you.

It was a stimulating conversation – and a long one. I’ve broken our conversation into six sections, so if you’re pressed for time, just scroll to the sections that look most interesting (but the whole conversation is worth reading).

Language is not thought – overview of the argument

Brain networks and our inner monologues

Implications for Artificial Intelligence

Cultural cognition and implications for education

Philosophical pushback

Talking to whales

1. Language is not thought – overview of the argument

So your paper advances two main arguments, one about thought, the other language. Starting with thought, you argue that it happens independently of language. I see your claim supported by two pillars. The first pillar is that we can look at the brain when we’re thinking, image it, and see different regions activated that are unrelated to the region we use to express language. This suggests that there are all sorts of aspects of thought that are independent of language.

Exactly.

The second pillar is that we can observe people who have lost their linguistic ability yet are still capable of doing cognitively demanding things. We can take language away, but this does not take thought away.

Yes, precisely.

Then, turning to language, my understanding of your claim is that we can look broadly at all human languages and see certain commonalities in their structure. And these commonalities suggest that language is fundamentally designed to facilitate communication.

That's right.

2. Brain networks and our inner monologues

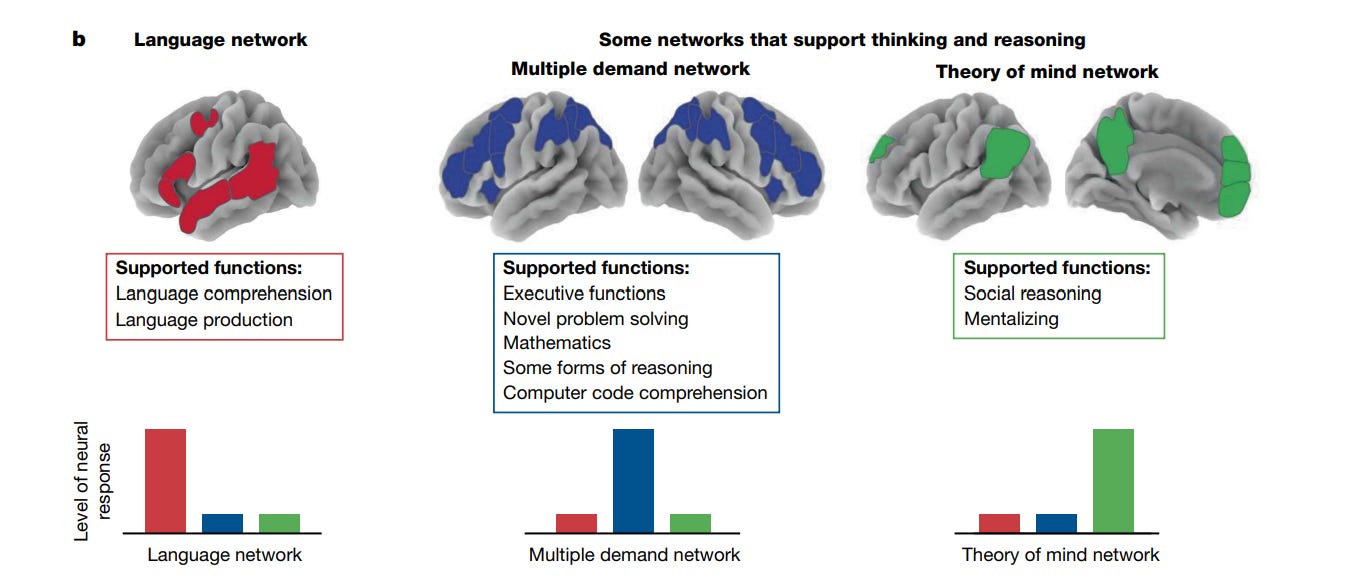

So let’s go back to your argument about thought. You have this graphic showing networks in our brain that support thinking and reasoning, and you identify by my count at least four of them. Language is a network. There's something called the multiple demand network. There's the theory of mind network. And then later I read something about the default network, which sounds to me like “other.”

So my questions around this are, are those all the networks? Are there more? How many networks are we talking about?

That's a great question. The ontology of thought is still a very active research program. Just in the last year and a half, our group found some brain areas that respond to particular aspects of logical reasoning that don't seem to be a part of the multiple demand network, which I had totally assumed would be. And another lab in our department found a region that seems to be quite selectively engaged in causal reasoning. So through careful experimentation, I feel like we may keep discovering new brain areas that support different aspects of thinking.

You can also approach this question from the behavioral standpoint: you could imagine devising a huge host of different kinds of tasks, administering them to a large number of people, and seeing how abilities group together.

Okay, so switching over to the language side of things, you're saying that language is not essential to thought. But you’d agree that everyone needs to learn to read, right, because we transmit quite a bit of cultural knowledge through linguistics?

Exactly. Of course, language is really useful, because we can write down what we have figured out, and then that can be passed along. Or, even before writing happened, knowledge was being passed on in oral traditions. That's why language is so powerful, because we can take any thought and convert it into a linguistic format and then store it and pass it on to future generations.

This is counterintuitive, because there are the people – I think you're one of them, and I know I’m one of them – where it feels as if language is running through our head as we think. Can you explain that?

The phenomenology of inner voice, or inner speech, is very hard to study. Because scientifically controlling how people think is just…tricky. That said, it seems like about 15 to 20% of people just don't have any sensation of this inner voice. A similar proportion don't engage in visual imagery at all.

3. Implications for Artificial Intelligence

Okay, so let's turn to the implications of your argument for AI. Because when I read your paper, my immediate thought was, you've dealt essentially a fatal blow to the claim that we will get to something like artificial general intelligence through the scaling up of large language models. There's been this notion that if we just feed them more information and throw more Nvidia chips at them, their capabilities will continue to increase. Do you have a view on that?

I don't think scaling will get you there all the way. To be honest, I have been incredibly impressed by how far linguistic statistics can take you. In some sense, it's not surprising, because we use language to express our views of the world, our inner and outer worlds. And so there's a lot of information packaged, reflected in language already, and especially if you use billions of tokens, there is a lot of information that can be extracted, structured, and mined in various ways.

But I just don't think predicting the next token is the right objective for thinking. It just seems like something is fundamentally missing. If you think about the distinction between [Dan Kahneman’s] “system one” and “system two” model of the mind, system-two processing seems fundamentally different from trying to predict immediate next states in the world. It's slow, it's deliberate, and it's very satisfying to get that level of structure to the representations you may have learned. This is what we call insight.

I'd be really surprised if scaling got you there. As I'm sure you're aware, the benchmarks for LLMs are not very good. The people who are evaluating these models are not always good experimentalists. And so there's all this hype where LLMs can do this or that. But then you go in and you do a very simple perturbation, and you break them very easily. So they lack the kind of robustness that humans have.

And so now many people are exploring neuro-symbolic approaches, where you basically build a system which is more like the human mind. You take an LLM, which is kind of like a decoder between thoughts and word sequences, and hook it up to something like a symbolic problem solver, and then see whether that kind of a system is more robust at, for example, solving verbal math problems. The big challenge, of course, is that the neuro-symbolic approach is necessarily more restricted to particular domains. It's very hard to create a generic thing that will do social reasoning and abstract formal reasoning and causal reasoning and all that. And so it ends up being a targeted system that solves a particular kind of problem. So, not AGI.

But I don't even know why people want AGI, honestly. Specialization and division of labor is a really useful construct. Why do you want to have a vacuum cleaner that will also talk to you about your problems?

It will warm my friend Gary Marcus's heart to hear you talk about the neuro-symbolic piece, because he's been adamantly making that case for a long time. My next question, which you’ve already anticipated, is that you and others have said that if we could create other modules and bolt them together with an LLM, we might have an AGI system.

How? I'm very impressed with how capable LLMs are, as you are, but it is not clear to me that we can just map that over to other domains. Many smart people in AI think we can. My question is, we have lots of linguistic data, but where do we get the other data? You have that great line in your paper, “brains don't fossilize,” and that suggests to me that we do not have this repository of these other forms of thinking in the same way that we do with language.

So I don't get it. What am I missing?

A positive take on the whole enterprise of trying to just scale things – more data, bigger models, or whatever – is if you get something that performs in ways that are comparable to humans with all the right controls, that's informative. This is another question that we also couldn't ask before: are there multiple ways to build intelligence? And so maybe neuro-symbolic is one way, but maybe there is this other way to do it.

My interest in building neuro-symbolic compositional systems has to do with my desire to ask questions about how the language system works with systems of thought and reasoning and knowledge. In humans, or even in animals, we don't have good tools for probing inter-system interactions, because you need high density neural recordings in multiple places, and that approach just doesn't exist yet.

And so these computational models could give you a handle on trying to understand what are the ways in which these different modules could get hooked up together, and what are the ways in which the representations from this noisy linguistic system could get translated into something more stable and robust. Then you could perform the relevant operations on them. That’s my interest, trying to understand how these different pieces work together. We don't have good tools for doing this in actual brains.

That will be a big deal, if you can do that!

4. Cultural cognition and implications for education

Ok, so at the very end of your paper, you nod to the importance of culture in the transmission of knowledge. And you cite my friend Celia Heyes's work around cognitive gadgets theory, that culture shapes our cognitive architecture. One of the concerns I have within the education domain is that so many people are excited about using AI as a tool for educating students, on a personalized, individualized level. This seems to me to sort of fundamentally misapprehend the social nature of how we learn from each other, and the uniqueness of our species in being able to develop these languages in order to communicate our thoughts to each other and across time.

I'm curious where you situate yourself within cultural cognition and the social aspect of learning?

A lot of learning is certainly very grounded in social interaction. Like most of what we learn, we learn through language as conveyed by others. We learn a lot from interactions with the world too, but most of what we know about the world comes in through language. It’s told to us by parents and caretakers in the first few years, and then read in books or explained by teachers. Of course, in literate societies, once you have the ability to read, you don't need to have social interactions to learn, you can just learn from linguistic information directly, and you can do so on your own.

Well…you identify our theory of mind capacity, our social reasoning, as one of the components of our neural architecture, right? That feels somewhat fundamental to human beings. I see no way, shape, or form in which AI currently can develop a theory of mind, and I don't even see a theoretical way in which, just by scaling a large language model, it ever could. And so from that perspective, I worry greatly about using these tools to tutor children.

Yeah, that's very interesting. So the theory of mind abilities are different from abstract formal reasoning. They may share some of the same kinds of computations, but they're distinct systems, as I'm sure you've probably encountered from having math teachers who are actually not so good at understanding why their students don’t understand something. Higher education institutions are full of these people.

At some level, it has to be the case that a teacher who has a good theory of mind, and can understand why somebody may be confused, will be a better teacher. But even if someone is a poor teacher, they may be a brilliant mathematician. And in many cases, we can learn the relevant information about math and other domains from textbooks or whatever, without any social context.

But in the context of education, if you want to have these AI agents that tutor kids, it seems weird not to worry about them having some representation of the learner’s mind. I haven't spent enough time thinking about this. I imagine that there may be ways to program AI agents in ways where the agent would not have a theory of mind built in, but you would figure out ways for them to react to the learner’s confusion, but I'm not sure.

Part of the problem, particularly when you're dealing with students and children, is that their cognition is emerging. So their articulation of their thoughts is incomplete. I don’t think we have a really robust model of misconceptions, and it would be hard to capture data about how we misrepresent our own thoughts. I think that's a big mountain for LLMs to climb.

5. Philosophical pushback

But let’s turn to your enemies and opposition. You mentioned to me that some philosophers have issues with your claims about thought and language. That intrigues me, because I think you're actually affirming the major philosophical move of the last 100 years starting with Wittgenstein.

So I’m curious what the pushback is?

Historically, there have been a lot of people who have basically couched complex thinking in language. And I think a lot just boils down to people wanting a parsimonious story for how we're different from other animals. “We have language and we're smarter, therefore we're smarter because we have language”, but of course this doesn't follow.

And a lot of philosophy is based on introspection. Many people have that strong sensation of an inner voice running in their heads. And so combining those two things together, they conclude language is how we think. Dan Dennett, a very smart guy, thought it all kind of happens in language. And I just think, it's very hard to introspect about ways of thinking. Introspection is good for generating hypotheses, but it doesn’t constitute data per se.

But some people just don't care about data. Chomsky has never cared about data. He never changed his mind about anything in the face of data. But I don't see it as my job to get Chomsky to change his mind; I want to train the new generation of scientists, who not only think deeply but also understand the primacy of data.

Yes, that's absolutely true, but on the other hand, Richard Rorty’s big move was to say to philosophers that the goal of finding a language to describe the world that transcends any place or time…will never work. In his view, languages are just the way that we communicate our claims about the world to one another. To my mind, you have empirically and scientifically validated what Rorty was saying many years ago.

Steven Piantadosi (one of my co-authors on the Nature paper) and Jessica Cantlon have recently argued that what makes humans smarter than other animals is likely just an increase in processing capacity, and against the idea that there’s a “golden ticket” that makes us smarter, like language or social reasoning. Steve and I have also been talking recently about how natural language is actually a very poor format for thinking, and that's why people ended up inventing things like Boolean algebra and Venn diagrams, because these are much better ways to represent abstract, relational concepts. If you try to write these out in sentences, it's pretty clunky and imprecise.

6. Talking to whales

We’ve talked a lot about human and artificial thought, but I want to conclude with a question about animal thought. I’ve written that I’m hopeful AI will help us talk to whales, but deep down I don’t really think it will. What about you?

I just think there's a lot we don't know about animal communication. There was a really long period where people were like “chimps, they just grunt.” And then people started using fancy tools to unpack the information that's in those grunts, and it turns out there's a lot of information, and other chimps use that information. Our speech probably sounds like grunts to them too.

But in general, I think modern AI tools hold a lot of promise for getting new insights into animal communication and cognition. Imagine a pod of whales, and we have them all implanted and record the signals they produce, and then you can use information about where they are, what the temperature of the water is, what social configurations they’re in when they make certain noises, and we can make cool inferences from that… That’s exactly what some researchers are now doing. It’s certainly not anything we could have done even a few years ago. So I think these tools are super exciting, and animals are super interesting – and we have a lot to learn from them.

I’m not like a human supremacist.

I’m grateful to Fedorenko for sharing her thoughts, and I hope you’ve enjoyed reading them. The Substacking wing of Cognitive Resonance will be on brief hiatus for the next two weeks due to travel.
