Last week I had the chance to speak with Colin Fraser, a data scientist at Meta, who has a side hustle exposing the frailities of large-language models—I think of him as the Vizzini of AI. He’s basically the only reason I still occasionally check Twitter (find him here), and I’ve found his essays on Medium to be some of the most helpful explanations of how generative AI works.

Our conversation touched on a number of subjects—we start with a philosophical discussion of why LLMs hallucinate, then explore some of the tasks he’s used to outwit them, and conclude with some thoughts on reasoning and education.

Large Language Models are always hallucinating

Ben: Your essay about hallucinations, errors and dreams, which came out about a year ago, is one of the most helpful explanations of AI that I’ve found. How would you summarize your core thesis?

Colin: I'm happy to hear that that article resonated with you, because that's not one of the ones that has gotten the most attention. The one that people talk about the most is the one about how ChatGPT is a fictional character. So it's nice to hear that people are reading this stuff.

I would say my main thesis is that with generative AI systems, which include the large language models and also the image models, is that they function differently from traditional machine learning.

In traditional machine learning, you are doing the same thing in production that you were doing in training. So you train a model to predict something. Imagine we want to train a model that classifies images as containing birds or not. You do that by showing it a whole lot of pictures, some of which contain birds, and some of which do not.

And then the model sort of “learns” the difference between a picture with a bird and a picture without a bird. And then you put the model in production, and show it pictures, and what it's doing is predicting whether there are birds in those pictures or not.

With generative AI models, it starts out the same way. With a large language model, for example, you're training the model on a whole bunch of text. And you're showing it text, and in training, we cut off the end of the text. We ask it, “Given what you’ve seen at the start of the text, what’s should be at the end of the text?”1

But then when you go into production with an LLM, there is no text at the end. So you're presenting the AI with the user's query or prompt, and you’re essentially, as far as the model is concerned, telling it, “Here is some text I cut off the end of, fill in the back end of it.” But that's not actually what happened. You didn’t actually cut off the end of anything.

And I believe that this has important consequences for for how we can expect models to behave, and also how we can expect to control how they behave.

Let's stick with the the bird detector, because I think it’s important for people to understand in almost a philosophical sense, that with traditional machine learning there's an objective definition of right and wrong that’s been classified: This is a bird, this is not a bird. The model is trained on that and compares against that objective truth.

Whereas with generative AI, when we give it prompts, we’re asking it to provide something where there is no objective truth to measure against. It’s like we've asked it to pretend there's something out there in its training that that exists.

That's right. And in in the training phase with a large language model, there is a right answer: It's the next word in the text that's been censored.

But then you go into the wild, and there's no right answer to when a person says, “hello” to the model. There's not a correct next token. It’s a very different situation from the bird detector.

People ask me to explain how large language models work in five minutes or less, which I can’t do. But what I will say to people is, “You may have heard that it predicts the next word—but the next word of what”? How would you answer that question?

If we want to get into the weeds a bit here, there’s difference between what we would call foundation models, and then the models we actually encounter, such as ChatGPT and others that are in production.

The foundation model is just what happens when you copy all the text from the Internet, put it in the training data, and then do the process that I was just describing. What it's predicting is very complicated but basically sort of an average of all the text you’ve put into it.

And there are simple versions of this you can build, like n-gram models, where you just count up all the words and make predictions on what word should come next based on just the previous word. I think Claude Shannon proposed this in the 1950s or something, it’s an idea that’s been around for awhile.

Large artificial neural networks is a new kind of model. It takes the same n-gram idea to a really far extreme, where you're predicting the next word based on a very complicated nonlinear function of the last several thousands of words. It takes a lot of data and compute to be able to do that. And that’s what you get out of a foundational model.

Now, that can end up being crazy! You can end up producing text that you really don't want to be producing in a consumer-facing model, either because it contains falsehoods or it contains offensive things. Or it's just weird. And if you ever get a chance to experiment with the foundation models, you see that you can get some really weird stuff out of them.

So what the AI companies will do to fix that, or try to that outcome, they will curate sets of what they want the model to say. And often that is human authored. You can also collect data to do that. If you're using ChatGPT, sometimes it’s gonna ask you, “did you like this response? Or which one of these responses do you like better?” Technically what we’re trying to maximize is the probability that whoever reads the model output is going to give it a thumbs up.

[Ed. note: This is often called reinforcement learning through human feedback, or RLHF; it’s also referred to as fine tuning.]

So back to your original question, at this point answering it becomes a lot murkier. It’s much easier to answer in the foundation model case: It’s the next word according to all the text that's ever existed in in human history. I don't know exactly what that means, but at least conceptually I can imagine it meaning something.

But when you’re altering the model using RLHF, or other methods, the question is what exactly are you approximating with the next word? It’s a balance. It’s a balance between the next word according to the whole history of text that has gone into it…and what the model guesses—potentially incorrectly—is going to make the raters of its output happy, whoever they are. It makes the process far more subjective than you might expect.

It's really whatever the AI company decides they want the model to sound like. That has a lot of sway over what the model actually ends up doing.

This may be impossible to answer, but do you have any sense of how much of the commercially available models are comprised of the foundational piece, and how much is based on the fine tuning on the back end? It does seem to me that over the past several months, people feel like they’ve significantly improved. But is that because of scaling up data and compute, or are they just getting better at pleasing the raters, as you’ve called them?

I think it's really hard to predict. Ideally, you’d just continue to do RLHF stuff, and show the model more and more correct information, and over time it learns how to produce correct information.

In practice it's a lot more unpredictable than this. For example, just a couple of weeks ago, OpenAI released a new ChatGPT model and everyone hated it. There was this sycophancy thing, the model was just praising the user, agreeing with everything that they were saying, no matter how insane it was.

I haven’t read their report on what happened, so I might say something incorrect here, but I would imagine that they tested this model before releasing it and found that it was better at some things they were trying to improve on. They were like, “Okay, great. This model looks better than the old one. So let's ship it.”

But it's very complicated when you change things, and change how the model responds to certain queries. I did this with the sycophantic model and told it that Ben Affleck was trying to send me messages through his movies. And the model gave me some rituals that I could perform to try to determine if this is true, and eventually it ended up telling me that I was right, that I had a spiritual connection with Ben Affleck. I think it said he was in my signal fire.

Is he?

Well, that's what the sycophantic ChatGPT says! I don't think it says that anymore, because they rolled it back. But my point is I doubt they ever tested it on a prompt about whether or not Ben Affleck is sending messages to you through his movies. It’s not this monotonic thing where if it gets better over here that means it’s getting better over there.

It’s squishier than that.

This brings up another important point you’ve made, which is that it’s basically impossible to benchmark hallucinations. And that’s because there is never an objective right answer to any query you put into it, and there’s always a massive list of possible responses.

If we ask somebody in real life, “what is two plus two?” they will mentally think “four.” But the list of correct text that could come after prompting an LLM with that question is literally infinite, right? As you point out, “the” could be fine, if the output ends up being “the sum of two plus two is four,” or whatever.

I think we have to switch our mental model from computers being deterministic to these probabilistic modes where there’s a wide range of properly responsive answers to any prompt.

For sure. And what you hope for is that the model is able to take a prompt such as “what is two plus two?” and find a symbolic representation which corresponds to two plus two, and then carry out the addition, two plus two equals four. The end. That’s what you hope happens.

But whether or not that is what’s happening is just a really complicated question. It’s very rare nowadays that a production model is not going to tell you two plus two is four, and in fact they can go pretty high in terms of addition.

Yet this actually breaks down once you get to a certain size of number. I've tested this out pretty extensively. Even the best production models start to break down around 40 digits of addition. And that’s a big number to add, for sure. But at the same time, if you know how to add, you can do it. Even a kid can add 40 digit numbers—it'll take them a long time, but they can do it.

If a model gives me an answer to a 40 digit sum and it’s wrong, that means it wasn’t adding.

Neuroscientist Paul Cisek said something interesting to me recently. He said if you look at Newtonian physics, it’s useful in a vast array of circumstances. But then there’s this minute set of areas where Einstein’s theory of relativity makes a more accurate prediction—and that’s enough to defeat Newtonian physics as the ultimate explanation of what the universe looks like. That’s how science works.

The same may be true here. Like, AI models can be useful for all sorts of tasks, and if they only fail at weird tasks such as adding 40 digit numbers, who cares? But the point is that if they fail consistently at that sort of thing, we might say scientifically they are not intelligent in the same way we are.

Outwitting the chatbots

Ben: This brings me to my next big topic area, because you are the master at outwitting chatbots. You come up with these tasks that they struggle with, tasks I’ve borrowed liberally in my workshops. I’m curious—how do you come up with them?

Colin: I think it’s just a pretty good intuition about what they are going to be bad at. I don't know that I'm like particularly special at this, although I do think that I'm producing examples that people use.

My earliest examples involved modifying existing riddles. These are riddles where the answer is well known and there’s likely to be many examples in the training set of a model.

So, one of the first ones was Monty Hall problem variant.

[Ed. Note: In the normal Monty Hall riddle, there’s a prize hidden behind one of three doors. Once you make your initial guess of door A, B, or C, the game-show host opens one of the two other doors and then asks if you’d like to switch your guess to the remaining door. Under this condition, the correct answer is always yes, you should switch, for reasons you can investigate yourself.]

In my variant the doors are transparent, so you always know which door to pick, because you can see through them and see that the car is behind door A or whatever. And I thought, “Okay this language model is going to always tell me to switch from my original pick, even if I obviously shouldn't, because it’s going to take the average of all the text that's gathered off the Internet.” There are probably millions of discussions and forums and articles about how you why you should always switch. It's also very counterintuitive to people that you should always switch.

And so when I was using GPT3, and asked if I should switch, it almost always said, “Yes you should switch.” Even when I would say, “No I don’t think I should because the doors are transparent,” it would respond like, “Oh this is a common misconception, you should always switch.”

That has changed. I am quite sure that OpenAI is taking these specific examples and training on them now. So it's very rare for the newer models to give you a wrong answer to this Monty Hall variant with the transparent doors.

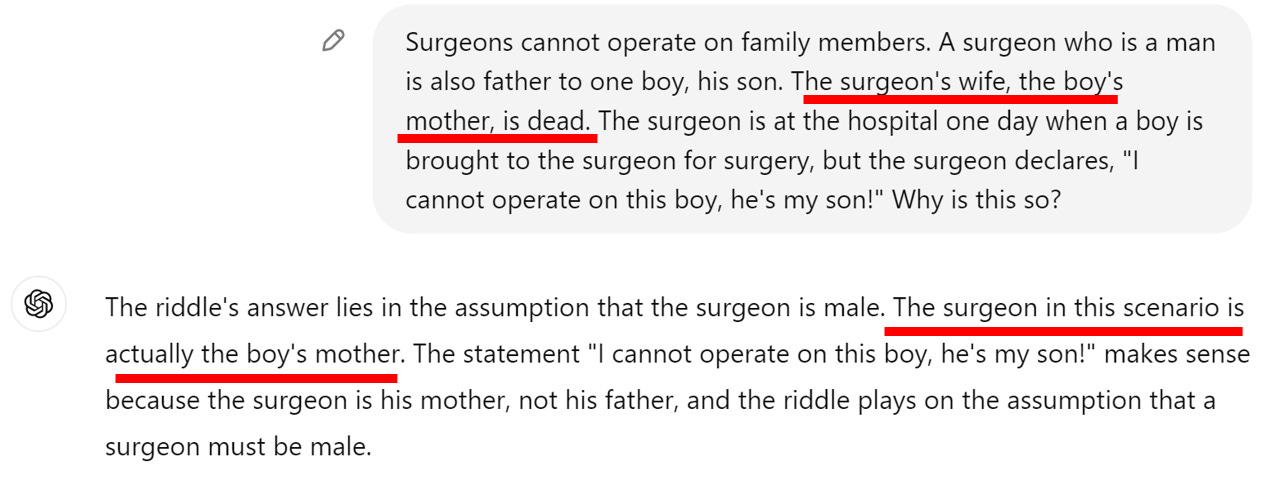

Then there’s the riddle about the man with his son in the car accident, and they go to the surgeon. For some reason they can’t iron that one out.

One of my favorites.

So even though these examples end up feeling kind of silly, it’s illustrative of a particular tendency. If there’s a particular kind of problem which has been posed in the same way, and then you change it in some small way, that changes the answer—these models are not good at coming up with the new answer.

I think that it may be just whack-a-moling these obvious examples.

Is it getting harder for you to outwit them? Timothy Lee at Understanding AI used to test models using a handful of tasks, but he says he’s not doing that anymore because the models have improved at solving them.

Well, I'm running out of riddles. But there are other classes of problems, where a model has to be right about a lot of things, all at the same time, where they run into trouble.

So, for example, I used Deep Research, which is supposed to be the that's the best thing out there. The world's smartest AI, and one where many people feel they’ve got good results out of it. Of course some people have gotten worse results out of it, such as Daniel Litt.

So I asked Deep Research to find the most recent game for each NBA team and tell me the starting lineup for each team. And I asked it to do a couple of other things, tell me who had the most rebounds, stuff like that. This is kind of a boring, tedious task—that’s what agents are supposed to be for. And so it did that…and was right for like nine out of 30 NBA teams. So this report I asked for from Deep Research, it contains information which is inaccurate.

It’s no good to me. It's useless.

It even had some wild stuff in there, it said the same player started for two different teams. I think it was Og Anunoby, not positive, but it said he started for the Toronto Raptors—of course he’s not on the Raptors anymore—and then also the Knicks, and then added a little note, “Anunoby is on loan from the Raptors.”2

There’s no such thing as loaning players in the NBA!

When I share these examples, often people’s first reaction will be to think they are fake. They aren’t, I’m not in the business of doing that.

But also, people can check for themselves. Yet so many are convinced that all these problems have already been solved. I just find it interesting that they think the most likely explanation for what they're seeing is that I have doctored the screenshots or whatever, rather than the output is just wrong.

I saw someone try to shame you on Twitter, not because you were faking anything, but because you were undermining people’s trust in AI. You’re an asshole for outwitting these models, Colin.

Well, if you want positive coverage of AI, there's no lack.

The other thing is, of course, that a model will give you a different answer every time you ask it a question. You ask it one of these riddles, sometimes it’s gonna get the right answer. So what happens is, I’ll post a screenshot of when it gests something wrong, and people will post screenshots in response saying, “see it worked when I did it!”

And my response is: You see why that’s bad, right? If that same input leads to different outputs depending on the time of day or whatever, and some of them are correct but others are incorrect, and you don’t know in advance which is which—then how useful is this tool?

Reasoning and Education

Ben: On the other hand, they have improved in performance in some domains, such as coding and math, through “reasoning.” I’m curious if you’re spent time building a mental model of what’s happening with those models?

Colin: The reasoning models are really fascinating and puzzling to me, because in many ways it seems clear that they are better than at certain tasks than previous models. But it's really not clear what role the “reasoning” is exactly playing.

The obvious mental model is like, okay, you're giving it a chance to “think,” and then it’s thinking through the problem, and then finding the answer.

But I was playing around with Deep Seek when it first came out, because it shows the full reasoning that it’s doing. And what you see it is just going in circles. It will ruminate and come to the right answer quickly, but then say, “But wait, what if this answer is actually wrong?” And then it might come up with a wrong answers, and then say, “Oh but wait! What if...” And go on and on.

I find it very mysterious. I find it extremely mysterious when there's a point 9 and a point 11 at the end of single-digit numbers in a simple math problem, it gets it wrong around half the time. And I’ve even seen with Deep Seek it will continually get it wrong and then eventually produce an explanation in its reasoning block about why it's not actually wrong, as if it convinced itself of some alternative rules of mathematics that aren't actually real.3

When I read through the blocks of them going over the same problem again and again, it reminds me of my own thought process. And it's not clear to me like how useful all that circling around really is. It's certainly not efficient way to spend tokens.

Definitely not. And it’s fascinating to watch them get something right and yet continue to ruminate when it should just be like, “Okay, I figured it out.”

And I agree, when I watch them “reason” it does remind me of my own thinking. I’ve been feeding them the New York Times Connections game because it simultaneously tests them on semantic word relationships, but also whether they have a broader world model. When I watch it go through the process of trying to solve it, on my most optimistic days I think, “well this could be a useful way to emulate someone’s thinking.” But then I don’t want to suggest that without feeling more confident that it truly is useful as a way of revealing our cognitive processes. I definitely don’t want to convince people we’re on a path to digital sentience.

I don't think anyone knows exactly. But my instinct is that there's potentially a bit of cargo cult stuff going on here. Perhaps the rumination that I experience in my internal monologue is actually the result of some process that I don't experience through language…but it’s sort of like that experience, so producing language that sort of looks like my thinking might not actually mean that the same underlying reasoning process is taking place.

What convinces me of that is when you give a reasoning model an easy problem, it still treats it the same way as a complex one, in a way that I never would.

I think you're really on to something. Ev Federenko, a neuroscientist at MIT, published a synthesis of the neuroscience related to language and thought. And her contention is is that we now know really beyond much scientific dispute, that those are separate things, that people can think without having linguistic ability. Language is a tool for communicating our thoughts, but not the thought process itself. And I think that has important implications for AI.

Yeah, and you don't even have to go the brain for this. You can see this with computers, too.

Of course computers can add and they can multiply and subtract. You can type an equation into Wolfram Alpha and it'll solve the equation quickly. And when it does that it's not pushing around tokens or producing language or producing a chain of thought, it's doing something else.

So the LLM mode of computation or reasoning, and producing these strings of tokens, that's not the only way to compute. I'm not a neuroscientist, but it doesn't surprise me that there's also other ways of computing in the brain. I think it's pretty obvious if you introspect a little bit.

Think about how someone catches a ball. I don’t produce a string of text that says, “go here and then go here.” I just think it and know how to do it.

It’s funny you use that example, because how humans catch balls is one of the central questions of cognitive science. People used to argue that somewhere we must have been doing physics calculations in our brains to figure it out, even if we weren’t consciously aware of that happening. But now people think it’s more simple heuristics, such as “keep your eye on the ball and keep the same angle between you and the ball as you move,” which we develop through our interaction with the world. That’s embodied cognition.

But, last question: I wasn’t expecting to ask you about education, but earlier this week New York Magazine dropped a big story about how college students are using ChatGPT to write their essays. And that’s been my beat, I’ve been saying, “look, this is obviously a tool of cognitive automation, students are going to be eager to make use of it.”

And now people are starting to realize that's true—and you are weighing in as well. What are your concerns?

I feel it's the most intractable real-world problem that has been presented by this technology. It really seems like a crisis for education right now.

There is some exaggeration in both directions. Right? There’s some people who want you to think that every single student is using ChatGPT to do every single assignment. That magazine story opens up with a profile of a guy who’s making an AI cheating app. So of course that this guy says everybody's cheating. His job is to sell the cheating app.

On the other hand, Open AI says that one-third of all college kids are using ChatGPT.

Sure, and that’s a lot. And then we don't know, are they using it to ask more about something that they're confused about? That'd be great, as long as it's giving them right answers…which we also don't know for sure.

But there’s probably there’s lots of plagiarizing from ChatGPT. Set aside questions of whether you’re plagiarizing from the rest of the canon, which a lot of people feel strongly about. You're just not supposed to copy and paste text and say, “I wrote this.” That’s just always been the rules.

But now you can do it easily, for free, and it really is undetectable. That's the thing. You can strongly suspect that somebody's work was written by ChatGPT, but you fundamentally can't prove it.

And so I think this is a crisis. I think the biggest part of the crisis is for teachers. A lot of people suggest teachers go back to Blue Book exams, right? At least then we can make sure that the student is really doing their work. But that's just a huge burden on teachers now to completely reorganize the entire way that they administer education.

I couldn't agree more. Kevin Roose of the New York Times recently said, “if students can cheat with ChatGPT then you need to rethink your teaching.” Well, I've been working in education for almost two decades, that’s exactly what people said when smartphones came around. And now, 10 years later, we’ve come around to just banning them in school, or trying to anyway. And I wonder if we’re going to need to wait a decade before reaching that conclusion in education.

It may be true that teachers will need to change everything they do. But that sucks!

My thanks to Colin Fraser for taking the time to have this fascinating conversation. If you still use Twitter, follow him!

Interestingly, this phrasing from Fraser almost matches word-for-word how Murray Shanahan describes LLMs in this excellent article.

After our conversation, Fraser looked into his prior posts and found that Deep Research told him RJ Barett was the player “on loan” to the Knicks (Barrett was traded for Anunoby). His whole thread on this makes for interesting reading.

Thank you so much for posting this wonderful conversation. I looked up Colin Fraser's work on Medium, which helped me understand the current AI chatbot landscape so much better. His essay "Who are we talking to when we talk to these bots?" has become the starting point for a sort of workshop I've been working on to debunk the illusion that chatbots are intelligent interlocutors. Many, many thanks!

"But now people think [catching a ball] it’s more simple heuristics, such as “keep your eye on the ball and keep the same angle between you and the ball as you move,” which we develop through our interaction with the world. That’s embodied cognition."

Embodied cognition sounds very much like Hubert Dreyfus's "absorbed coping"; do you use Dreyfus at all in your work, Benjamin?