Here’s what I’ve found interesting lately:

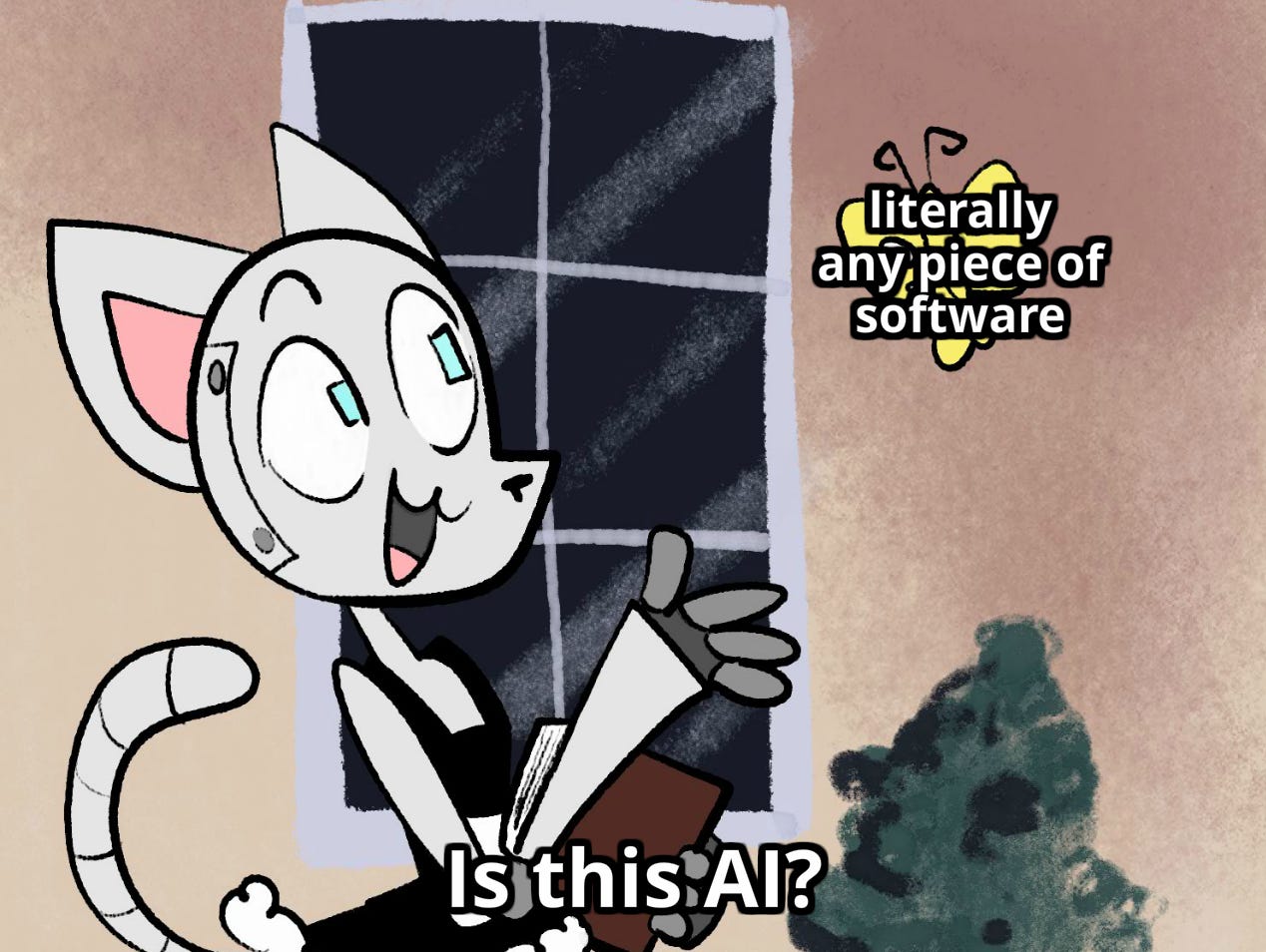

Nicky Case makes shtuff for curious and playful folks, and last week she dropped her guide to AI Safety for Fleshy Humans. I say “guide” but really it’s like an interactive comic mixed with an academic research paper combined with cognitive-science-inspired flash cards, oh and it also happens to be laugh-out-loud funny – in short, it’s brilliant. If I were to design a university devoted to helping people understand cognitive science, I’d throw oodles of money at Nicky to be lead curriculum designer. Here’s just one helpful graphic that I’ll soon be using in my presentations and workshops:

Seriously, check out the entire thing. I promise you won’t be disappointed.

Melanie Mitchell of the Santa Fe Institute is one of my favorite scientists who examines the similarities and differences between human cognition and AI – her Substack is the graduate-level version of my high-school musings here. Last week she got annoyed when Nature proclaimed that LLMs have surpassed humans on a medley of capabilities, including visual reasoning, reading comprehension, and mathematics. Key quote: “AI surpassing humans on a benchmark that is named after a general ability is not the same as AI surpassing humans on that general ability. Just because a benchmark has ‘reading comprehension’ in its name doesn’t mean that it tests general reading comprehension.” Preach. The challenge of figuring out how to benchmark AI capabilities is verrrrrry similar to the challenge of assessing what kids have learned.

Tutor chatbots are a bad idea, but what about using chatbots to help people become tutors and teachers? The Relay Graduate School of Education, one of the most innovative teacher-preparation programs in the US, is giving it a go. Mayme Hostetter, Relay’s CEO, is one of the sharpest thinkers in education (we’ve been working together for more than a decade), and wheels are in motion for us to debate over the summer on the role of AI in education. Just one problem: after speaking with her last week, it seems we…basically agree on everything. Relay is using AI to provide teachers-in-training with relatively simple, no-stakes opportunities to practice specific activities before going into the classroom. They are starting small, testing it empirically, and Mayme at least is under no illusions about the limitations of the reasoning powers of LLMs. This is how to do it.

At the ASU+GSV conference a few weeks ago, people kept asking me, “did you see Dan Meyer’s keynote?” Well I have now, as it’s posted online, and it’s a banger. Among other things, Dan argues that AI can neither solve the first mile of teaching (forming a meaningful relationship with students) nor the last (providing teachers with the specific things they need to support student learning). I don’t anticipate AI ever being good at the former, but on the latter, I can at least imagine a tool that combines AI’s natural-language prompting with something that produces specific tasks or other resources for educators. Perplexity, but for education. Building this would be hard, but if you’re an entrepreneur or venture capitalist interested in making it happen, let’s talk.

Greg Toppo of The74 was kind enough to interview me last week to get my extended thoughts on AI in education, and I’ll share whatever emerges when it runs. Interestingly, Greg has long been thinking about the role that games and puzzles might play in motivating students to learn. Somewhat relatedly, I’ll be writing something here soon about one game in particular that helps expose the power of human cognition and the relative limits of LLMs.

If you still use Twitter (I know, I know), you need to be following Chomba Bupe, an entrepreneur and autodidact living in Zambia who frequently calls out some of the more egregious misstatements about what AI can do, especially in comparison to humans and animals. Can we look at the output of LLMs and reverse engineer from there to impute that LLMs have some form of cognitive understanding? Of course not, just as “there is no way in hell you can figure out” how a computer works by looking at “keyboard presses & how the computer responds on screen…The model never really thinks about anything it outputs.”

Sean Trott wrote an excellent explainer of how “tokenization” works within LLMs. To humans, racket is one word; to an LLM, it’s two tokens, “rack” and “##et.” This actually matters more than you might think when it comes to understanding how LLMs work, and also why they’re bad at math. (I’ve been having an ongoing conversation with Sean about how to understand AI, the first two parts are here and here if you missed ‘em.)
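
For the curious, here’s a minimal sketch of how a word can end up split like that. It assumes a WordPiece-style tokenizer that greedily grabs the longest piece it recognizes, with “##” marking a piece that continues a word. The function name and the tiny vocabulary are made up for illustration; real tokenizers learn vocabularies of tens of thousands of pieces from data, and different models split text differently.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into subword pieces."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No known piece fits here, so the whole word is unknown.
            return [unk]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary, hand-picked for this example only.
TOY_VOCAB = {"rack", "##et", "12", "##34", "##5"}

print(wordpiece_tokenize("racket", TOY_VOCAB))  # ['rack', '##et']
print(wordpiece_tokenize("12345", TOY_VOCAB))   # ['12', '##34', '##5']
```

Notice what happens to the number: under this toy vocabulary, 12345 arrives as three chunks that have nothing to do with place value, which gives some intuition for why arithmetic on tokens is awkward.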

Ok, just one note of cognitive dissonance – this f’ing guy.

I’m heading to the University of Tennessee this week for another round of workshops with teacher-educators who are curious about whether and how to use AI to prepare future teachers. I’ll be posting some reflections on what I’m learning through this work soon.