What if today's LLMs are as good as it gets?

The broader world starts to realize scale may not be all we need

Look, I called my shot.

Back in April, I participated in a debate at the ASU+GSV Summit, the huge ed-tech conference that hosted a special three-day event devoted to AI in education. Shortly afterward, in this very Substack, I wrote the following:

Perhaps my spiciest debate take is that, for all the frothy hype surrounding AI in education at the conference, it felt somewhat…behind the times. In my view, there’s a growing awareness among prominent AI scientists that large language models have real deficiencies that aren’t easily solvable. For example, Yann LeCun, Meta’s global head of AI research (and a Turing Award winner for his work on developing neural networks), says that LLMs “suck” because they cannot reason, cannot plan, lack a world model, and cannot be fixed without a major redesign. As I argued, it’s fine to disagree with him (and me), but if you do, is that because you’ve grappled with the underlying science and come out on the other side…or because you just really want this technology to succeed?

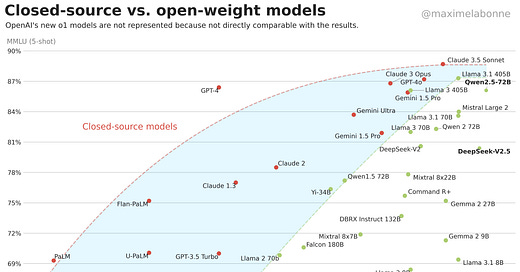

Well, well, WELL. Last week, the broader world caught up to what many in the scientific AI community have been saying for some time now: scaling up LLMs with more data and computing power is not—repeat, not—continuing to drive exponential improvement. Nor is it going to lead to them developing human-level intelligence. What’s that phrase about “gradually, then all at once”? This is what it looks like:

The Information: OpenAI shifts strategy as rate of ‘GPT’ AI improvements slows

Reuters: OpenAI and others seek new path to smarter AI as current methods hit limitations

Bloomberg: OpenAI, Google and Anthropic are struggling to build more advanced AI

Big Technology Podcast: Is Generative AI plateauing?

Benzinga (?): AI capabilities plateauing, say Andreessen Horowitz founders, echoing concerns of OpenAI co-founder Ilya Sutskever

Let’s note just how far things have traveled, intellectually and scientifically, in a very short period of time. Way back in April 2023, Sam Bowman, who heads a research division at Anthropic, published a widely circulated research article titled “Eight Things to Know About Large-Language Models.” His first claim regarding LLMs was that their capabilities would continue to reliably and dramatically improve as they “scaled” in size. Bigger means better:

Scaling laws allow us to precisely predict some coarse-but-useful measures of how capable future models will be as we scale them up along three dimensions: the amount of data they are fed, their size (measured in parameters), and the amount of computation used to train them (measured in FLOPs)….Our ability to make this kind of precise prediction is unusual in the history of software and unusual even in the history of modern AI research. It is also a powerful tool for driving investment since it allows R&D teams to propose model-training projects costing many millions of dollars, with reasonable confidence that these projects will succeed at producing economically valuable systems.

Bowman wasn’t crazy to make this claim. At the time, the steep rise in these models’ performance on various tests and benchmarks hinted that exponential growth in capabilities was possible. The phrase “scale is all you need” became commonplace in the AI discourse. Brave new worlds loomed.

Yet, while was happening, cognitive scientists such as Gary Marcus and Melanie Mitchell—to name only two—were loudly warning that, as a theoretical matter, this performance improvement was more of an illusion of intelligence, a deceptive chimera that belied the inability of LLMs to generalize, reason, or form abstractions the way humans can. Other scientists, such as Arvind Narayanan and Sayash Kapoor, later argued that scaling trends were also unreliable for economic reasons, as eventually all machine-learning systems offer diminishing returns on investment. Plus, there was lil 'ol me, arguing here in this very Substack, “the entirety of the world that surrounds us cannot be reduced to training data. The data sets that AI systems use are limited and finite—but the world is not.”

And now here we are. All these theoretical problems with scaling LLMs appear to be bearing out empirically. I wouldn’t say the AI bubble has burst, but when Marc “Every child will have an AI tutor that is infinitely patient, infinitely compassionate, infinitely knowledgeable, infinitely helpful” Andreessen is publicly acknowledging that existing models are topping out in capabilities…it’s a significant shift in tone. Likewise, when Ilya Sutskever—who is as responsible as anyone for the tech breakthroughs that underlie LLMs—says the scaling era is over and we’re entering a renewed age of “wonder and discovery,” well, it suggests we’re gonna need some new wonderous discoveries, and it’s not at all clear how easy they will be to come by.

Of course, caveats apply. First, despite the flurry of news stories last week, it’s still possible the next wave of models will knock our socks off. Sam Altman tweeted last week that “there is no wall” of model performance and, you know, maybe he’s telling the truth (cough). Second, even if scaling alone isn’t the cure-all, there are still very smart scientists investigating how to develop human-like reasoning abilities in LLMs. Yoshua Bengio, for example, is another Turing Award-winning cognitive scientist who acknowledges that these models are incapable of “causal discovery and causal reasoning.” From what I can tell, he’s hard at work trying to statistically model how we humans make causal inferences, and should he succeed, well, that would be a big deal. Similarly, cognitive scientist Sean Trott has alerted me to scientific efforts to develop artificial intelligence using “baby” language models rather than large ones. This too could prove interesting.

We’ll just have to see! This is why science is cool—new roadblocks lead to new theories, which lead to new research efforts, and so on. That said, when academic AI influencers such as Ethan Mollick say, “today’s AI is the worst you’ll ever use,” we should think critically about this claim. No doubt we’ll see some improvements in the next generation of LLMs, but if the overall curve of capabilities is slowing way down, then what?

What if today’s generative AI is basically as good as it’s going to get?

One final point. If scaling is indeed dead, I hope speculation about “p(doom)” also dies along with it. Occasionally people ask me what I think the likelihood is that these models might bring about the destruction of humanity along the lines that Eliezer Yudowsky and others like to warn about. My answer? Zero. Zip. Nilch. Nada. Honestly, I find the notion that these tools will destroy humanity as just as ludicrous as techno-optimistic delusions that they’ll make us immortal. They can still be quite dangerous and used for nefarious purposes, of course—that’s already happening—but they aren’t becoming self-aware like Skynet in the Terminator movies.

Pay attention to the skeptical scientists who are doing the important work of demystifing generative AI, and you’ll sleep easier at night. I certainly do!

Reading you and the algorithm bridge is close to the enlightenment

If this is the best we get from transformer-based AI models, then maybe all the teachers who have simply ignored the hype and hyperventilating were the smart ones. The real losers are Chegg and the low-paid contract workers in Kenya and India who were writing papers for rich students. And, I suppose, investors who bought at the top.

Educators are left with the same problem we had before ChatGPT and Khanmigo in that most students are not particularly interested in doing the cultural work teachers assign them. Personalized tutors were never going to be the answer to that problem.

The impetus to change how we approach the teaching of writing and reading remains. Thanks to ChatGPT we couldn't expect the elephant to stay quiet while we went about teaching as usual while arranging our desks around its bulk. Maybe now, we can?

In any case, my big question remains. Is there anything in this cultural technology that has educational value?