Something wicked this way comes

Big Tech CEOs, education policymakers, and universities continue to push AI upon students

I planned this week to write a wonky overview of a newly published journal article suggesting a research agenda for neurosymbolic AI in the age of large-language models. Fun, right? But I’m afraid we can’t have nice things here at Cognitive Resonance, because people keep saying and doing dumb shit involving AI in education, and I must rant about it.

My irritating week started with this New York Times op-ed written by Eric Schmidt, the former Google CEO, and his colleague Selina Xu. Fun fact: Schmidt’s philanthropic enterprise, Schmidt Futures, is where many former Obama officials landed after that Administration ended; the former executive director Tom Kalil and Kumar Garg both worked within the White House Office of Science and Technology Policy. Kalil and Garg have since left to create “Renaissance Philanthropy,” which “aims to recruit high-net-worth individuals, families, and foundations to fund transformational science, technology, and innovation projects,” according to something hilariously called Worth.com. It’s all a continuation of the LinkedIn-Abundancy techno-agenda that the Democratic party is determined to cling to as, you know, a military occupation of our nation’s capital unfolds, people are being forcibly disappeared, Texas legislators are being held hostage, etc., etc.

But I digress. Returning to the Schmidt-Xu essay, I was encouraged at first by the headline, “Silicon Valley Is Drifting Out of Touch With the Rest of America,” because indeed it is! Granted, “out of touch” feels a bit mild, given that the technologies that Big Tech companies have been pushing out for the past two decades appear to be bringing about the end of America, but if Schmidt-Xu were prepared to acknowledge the horrors of what’s happening and take some responsibility for the harms they’ve caused, well, forgiveness is a virtue.

That, however, is not their theme. Instead, they plead with Silicon Valley denizens to stop worrying about whether AI turns into “artificial general intelligence” and instead appreciate just how amazing AI is already. The core of their argument is a compare-and-contrast with China, where (they claim) AI is simply being incorporated into daily life. I have no idea if that’s true, but I have to say, the evidence they cite is weird. For example, “in rural villages, competitions among Chinese farmers have been held to improve AI tools for harvest.” What? Uh, how’d that go?

Anyway, then they fired this shot across my bow:

Many of the purported benefits of AGI—in science, education, health care and the like—can already be achieved with the careful refinement and use of powerful existing models. For example, why do we still not have a product that teaches all humans essential, cutting-edge knowledge in their own languages in personalized, gamified ways?

Gee, I dunno! Maybe it’s because what makes humans unique is our ability to learn from one another, and we’d much rather do that than type back and forth with robots? Maybe it’s because teaching requires teachers to develop a theory of mind as to what’s taking place in the minds of their students, and large-language models are devoid of the capacity to do this? Maybe it’s because education technology in general is typically only used by the most cognitively advanced students? Maybe it’s because effortful thinking is hard, and you can’t gamify your way through fundamental challenges to cognition?

Schmidt-Xu then go on to cite smartphones as a “game changing” technology that caused a “revolution…because cheap, adequately capable devices proliferated across the globe, finding their way into the hands of villagers and street vendors.” I confess I myself missed the global smartphone revolution led by villagers and street vendors, as I’ve been a bit preoccupied by the technofascist one led by Silicon Valley billionaires. What’s more, do they have any awareness that schools, school districts, states and even entire countries are banning smartphones from education, having (belatedly) realized that supplying kids with tools of distraction while they sit in class is counterproductive to, you know, trying to teach them?

Does anyone within Schmidt’s empire know a single fucking thing about how education actually works?

I was mulling on that point when a reporter in Louisiana reached out midweek to drive me even further up the wall by alerting me to a resolution that’s aimed at jamming AI down the throats of educators in that state. Details here.

Where to start with the bullshit, where to start. Among other things, the resolution states that the state board of education (BESE) must play a “critical role…in ensuring equitable access to high-quality AI instruction.” I would love for someone at BESE to tell me what “high-quality AI instruction” they have in mind, because there sure isn’t any rigorous research I’ve read to support the existence of such a thing. Likewise, BESE wants to “assist local school systems in evaluating and adopting adaptable, high-quality AI learning solutions”—but what are these so-called solutions? What exactly is the problem that these purported solutions are solving for—that humans exist?

The delicious irony here is that the Louisiana AI resolution is clearly designed to position the state to go after whatever federal education funding trickles out from the Trump Administration’s Executive Order-palooza on AI. I am sure all the conservative education pundits who railed against the Obama and Biden Administrations for “federal overreach” into state education policy will condemn this effort too, because they place principle ahead of naked power grabs, right?

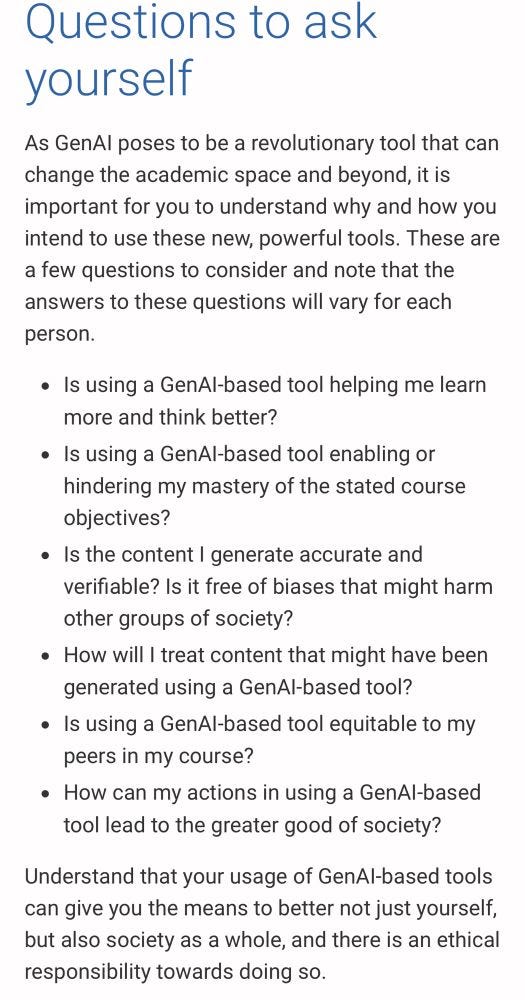

Serenity now, serenity now, I began to chant to myself at this point, only to wake up Thursday morning to find this new AI guidance at the top of my Bluesky timeline, courtesy of the University of Michigan:

I find this so cowardly. You see here Michigan essentially surrendering to students using AI: the question is not whether they should use these tools at all, but rather “why and how.” The listed questions are, on their face, unobjectionable, but the answer to the first one (will genAI help students learn more and “think better”?) is surely “no” in 90 to 99.5% of all use cases. Given that, how can the administrators who came up with this guidance possibly contend that student use of genAI will better themselves and society? That students in fact have an ethical responsibility to make use of this technology?

With apologies, I must shout again: AI IS A TOOL OF COGNITIVE AUTOMATION! That’s the value proposition! The whole bet here is that these tools will be useful because they can complete cognitive tasks faster and/or better than humans can! Leaving aside whether the Big Tech companies pushing them upon us will actually be able to achieve that goal, we need to stop pretending that they are presently doing anything other than acting as a substitute for the effortful thinking that we want students to be undertaking so as to build their own knowledge, in their own heads, in their own minds.

Gah. Is it so much to ask that our institutions of higher education stand up for the fundamentally human act of educating? Or have our temples of knowledge themselves become so corrupted by the poisoned chalice of techno-solutionism that there is no place left to turn? And if so…

Benjamin, thank you for this insightful article. You confirmed one of my greatest concerns: that using AI becomes a replacement for thought.

What then? Especially for the young, whose brains are just beginning to form, who're not at the developmental stage that unfolds the ability to think rationally and grasp abstract concepts?

Time and again, I go back to that philosophical treatise, Jurassic Park, and its sage, the mathematician Malcolm, who noted (and I paraphrase), "the scientists were so busy seeing if they could, they didn't stop to think if they should."

In Alabama, cell phones have been banned this year. Students are struggling with withdrawal from their phones. Some appropriate uses of phones are now off-limits because the new rules didn't thoughtfully consider the details of a ban. It could be that considering how, or whether, we start to embrace AI for all things in all grades in all subjects is a better idea than going forward fast because it's inevitable. Experience is a dear teacher, but fools will have no other.