Searching for Abstract Intelligence

Humans learn the rules of chess but large-language models fake it

If you’re in the AI skepticism business—and boy am I!—one go-to move is to question whether large-language models are capable of forming or understanding “abstractions” in the way that humans can. But what does that mean?

To probe at that question, I’ll start by sharing with you that my daughter, who just turned nine, has recently taken an interest in chess, much to her dad’s delight. I’m not sure when she discovered that her Duolingo app includes a tiny chess engine, but whenever it was, she’s now learned the basic rules of how the pieces move (more complex operations such as castling remain a work in progress). She’s also quick to tell me, “I’m not a prodigy, Dad!”, which is great because (a) neither am I and (b) we’ve been working on improving sportsmanship.

Josh Waitzkin was a chess prodigy, and readers of a certain age may remember his name from the 1993 film Searching for Bobby Fischer. If you’re too young to have seen this movie, fire up ye olde streaming service, because it’s incredible, and has one of the all-time great casts, including Laurence Fishburne, Ben Kingsley, Joe Mantegna, Joan Allen, William H. Macy, Laura Linney, and an eight-year-old Max Pomeranc playing Josh (and doing an extraordinary job of it).1

The core of the film revolves around the emergence of Josh’s chess-playing talent and what it portends for his future. Josh’s father Fred (played by Mantegna) and chess coach Bruce (played by Kingsley) very much want to push Josh to become elite, to become the next Bobby Fischer. But Josh’s mother Bonnie (played by Allen) does not share their obsession, and she worries (rightly, it seems, given how Bobby Fischer turned out in real life) that maniacally focusing on becoming great at this ultimately pointless game will rob Josh of his childhood. Later, Josh’s teacher (played by Linney) raises the same concern, and warns his parents that Josh’s schoolwork and social life are suffering because of his devotion to “this chess thing”:

Incredible scene. But let’s get back to abstractions.

At the start of the movie, we see Josh going to Washington Square Park in New York City and observing “hustlers” playing chess, including the magnetic Vinnie, masterfully portrayed by a young Laurence Fishburne.2 It’s by observing these games, we are told, that Josh learned the basic rules of chess. This is a bit of a Hollywood exaggeration, as it turns out—according to the book by Fred Waitzkin that the movie is based on, Josh’s love for chess did originate with him watching people play in the park, but it was his after-school teacher who explained how the pieces moved. Either way, what’s definitely true is that within one year Josh fully understood the rules and was defeating adult chess players on the regular, including his dad.3

Which is another way of saying that, by age seven, Waitzkin had developed an abstract understanding of chess. Although chess can of course be played using physical pieces on a physical board, Josh was capable of playing entire chess games in his head, following the set of abstract rules that govern gameplay. What’s more, he could also picture hypothetical future game states by working through how combinations of moves might play out. That is, he could imagine an abstract future.

Perhaps you can see why cognitive scientists are so often enamored with chess, the “sport of thinking” as Fred Waitzkin aptly describes it. In essence, the entire game is premised on creating an abstract picture of a little medieval battlefield, and then further imagining various possibilities that may arise depending on what decisions are made. Viewed this way, chess serves as a microcosm of our intellect.

Now to link this up to AI. A month ago, Gary Marcus wrote a terrific (if long!) essay about cognitive models, which he describes as “internal mental representations” of the world. The idea that humans use such models in order to navigate the world is central to all of cognitive science. But also, befitting the nature of all models, they are incomplete, and “invariably involve abstractions that leave some details out,” as Marcus notes. This is a feature, not a bug, of human cognition—among other things, these abstractions help us to transfer our knowledge to novel situations.

What about large-language models: can they form abstractions? Well, when it comes to chess, thus far the answer is no. For all the claims about generative AI becoming “superintelligent” or “powerfully intelligent” or “ultra mega scary AF intelligent” or whatever the latest claim is…these models have not developed an abstract understanding of this relatively simple game. Marcus again:

LLMs have a lot of stored information about chess, scraped from databases of chess games, books about chess, and so on. This allows them to play chess decently well in the opening moves of the game, where moves are very stylized…As the game proceeds, though, you can rely less and less on simply mimicking games in the database. After White’s first move in chess, there are literally only 20 possible board states (4 knight moves and 16 pawn moves), and only a handful of those are particularly common; by the midgame there are billions of possibilities. Memorization will work only for a few of these.

So what happens? By the midgame, LLMs often get lost. Not only does the quality of play diminish deeper into the game, but LLMs start to make illegal moves.

This is the crucial point: Humans, even at young ages, are able to infer and understand abstract rules from observation and instruction, and once we develop our abstract understanding of these rules, we will reliably follow them; we won’t just randomly decide to move our rooks diagonally or whatever. In contrast, while large-language models can “memorize” an incredibly broad array of chess games in training, they do not reliably follow the rules of chess as the complexity of the game positions increases, and they will start to move illegally.

They just aren’t that smart.
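To make “following an abstract rule” concrete, here is a minimal Python sketch. It is a hand-rolled toy under loud assumptions, not a chess engine and not anything from Marcus’s essay: the function names are hypothetical, the first function only handles the standard starting position, and the rook check looks only at the shape of the move (it ignores blocking pieces, captures, and checks). It reproduces the count of 20 first moves from the quote above, and it shows that “rooks don’t move diagonally” can be stated once as a rule rather than recalled from remembered games.

```python
# Illustrative toy only; every name below is hypothetical.

FILES = "abcdefgh"


def white_first_moves():
    """List White's legal first moves from the standard starting position."""
    moves = []
    # Each of the 8 pawns may advance one or two squares from rank 2.
    for f in FILES:
        moves.append(f + "2" + f + "3")
        moves.append(f + "2" + f + "4")
    # Each knight (b1, g1) can jump over the pawns to two squares on rank 3.
    for start in ("b1", "g1"):
        col = FILES.index(start[0])
        for dc in (-1, 1):
            moves.append(start + FILES[col + dc] + "3")
    return moves


def rook_move_has_legal_shape(src, dst):
    """True if src -> dst runs along one rank or one file (blockers ignored)."""
    same_file = src[0] == dst[0]
    same_rank = src[1] == dst[1]
    return src != dst and (same_file or same_rank)


print(len(white_first_moves()))               # 16 pawn moves + 4 knight moves
print(rook_move_has_legal_shape("a1", "a5"))  # straight up the a-file
print(rook_move_has_legal_shape("a1", "c3"))  # a diagonal, so not a rook move
```

The rook check never consults a database of past games. It applies the same test to any pair of squares, which is the sense in which a rule is abstract.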

Ironically, of course, many seminal advances in AI have involved chess. Famously, in 1997 IBM’s Deep Blue beat world champion Garry Kasparov, leading to a wave of predictions about AI replacing humans across all sorts of cognitively demanding tasks, including teaching. Perhaps that sounds familiar? (For more on this, Greg Toppo at The 74 wrote a great look-back piece on IBM’s Watson-related AI tutoring efforts.)

And more recently, the use of “deep learning” techniques has fueled advances in AI chess models such that they completely dominate human performance. In 2017, for example, Google’s DeepMind released AlphaZero, a game-playing AI model that they claimed was able to “self-train” on chess and defeat even the most advanced computer chess engines. This, DeepMind argued, was proof that AI models could in essence “learn” cognitively demanding tasks of their own accord.

Once again, Gary Marcus was having none of it. In an interesting paper on innate aspects of cognition, he patiently explained that DeepMind had actually built quite a bit of human knowledge into the structure of AlphaZero, such as the use of Monte Carlo tree search. The point Marcus made then (and now) is that these AI models were not really learning from the data they were being fed. Instead, they were being given tools to use that allowed them to essentially number-crunch their way to chess greatness. That’s no small feat! But it’s fundamentally different from how humans learn.

You might think we’ve gone as deep into the weeds as we can on this, but think again!

As I was starting to draft this essay, Peli Grietzer on BlueSky pointed me to DeepMind’s more recent AI chess model, “MuZero,” which came out in 2019. As with AlphaZero, DeepMind claimed to have developed a deep learning methodology that could lead to superhuman performance in chess (as well as Go and old Atari games, amusingly). But, perhaps in response to Marcus’s critiques, DeepMind also boasted that MuZero’s training method “does not require any knowledge of the game rules or environment dynamics, potentially paving the way towards the application of powerful learning and planning methods to a host of real-world domains for which there exists no perfect simulator.” (My emphasis)

I find this baffling. If I’m understanding the claim correctly, DeepMind is saying that MuZero was able to infer the rules of chess without explicit programming. But this makes no conceptual sense. There is nothing inherent (or innate?) about the standard version of how chess is played. In fact, it’s common for chess players to play variants of the game using different rules, so if MuZero was not shown examples of existing games to “learn” from, nor programmed with the rules directly, I literally cannot imagine how it could develop an abstract understanding of chess.

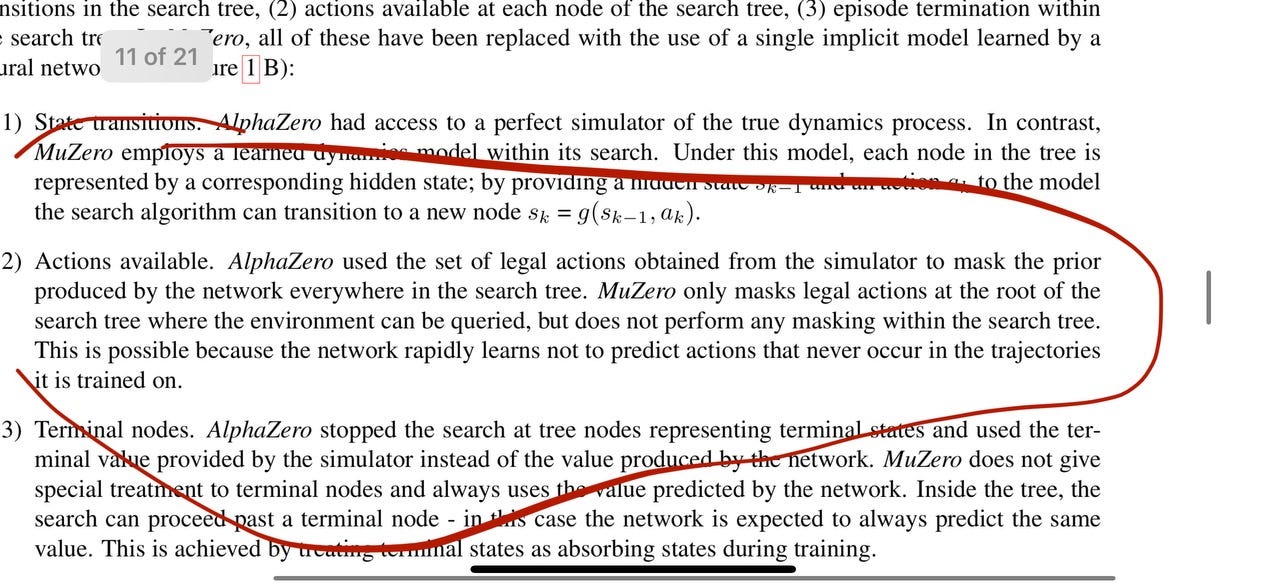

Seeking to sanity check myself, I reached out to Gary Marcus himself to get his take on MuZero, but it’s not something he’s looked into, though as he quickly noted, we haven’t heard much about it in the five years since it was released. Good point. I then pinged a cognitive scientist who understands the technical inner workings of AI better than I do, and (initially, at least) they confirmed that it appeared MuZero was indeed directly trained on the rules of chess, based on something they found buried in an appendix:

That’s pretty wonky, so I’d hoped to quote them in more detail but they were nervous about me doing so, even if anonymized, which may say something about the intellectual climate in US academia nowadays. But the point is they affirmed my hunch.

So, here’s the upshot: Despite DeepMind’s claim to the contrary, it seems to me that MuZero did not develop an abstract understanding of the rules of chess by inference. The rules were programmed in. No shame in that, but it underscores the difference between what AI is good at (crunching numbers) versus what we humans remain uniquely effective at doing (thinking abstractly).

Whew. I didn’t intend to write 2,000 words on chess, I swear, but here we are. To close out, in the course of doing research for this post I looked into what Josh Waitzkin is up to nowadays. Turns out he moved on from chess in his early 20s, got into various martial arts (cool), now seems to be into something called foiling (like surfing but with a fin?), has written a book called The Art of Learning (hmm), and has been going on podcasts lately hinting that AI is going to take over the world (dammit man).

And then there’s the 2017 afterword to the book his father wrote, wherein Fred and Josh discuss the impact of the book and movie on their lives, and Josh tells his dad this:

I’ve thought a lot about our relationship in those times. It’s so easy to say I grew up with a sense of conditional love. That was something that I had to work through. But now as 40-year-old man, mostly I feel that the core connection between us was so profound. My demons became our demons. If I lost, you felt pain. You were shattered with me. That is something that runs parallel to a loss of love.

There was a moment I realized I didn’t want to spend my life staring at 64 squares as a metaphor for life. I wanted to look at life directly.

Sometimes we need to flee our own abstractions.

If you haven’t had enough chess—ha!—I’ve become mildly addicted to Daniel King’s game recaps. Here’s one from 2017 where he analyzes the impressive and creative play of AlphaZero against another powerful chess engine (Stockfish):

And as I was readying to hit send on this post, I found Roger Ebert’s review of Searching for Bobby Fischer and it’s simply fantastic. To wit:

Prodigies are found most often in three fields: chess, mathematics and music. All three depend upon an intuitive grasp of complex relationships. None depends on social skills, maturity, or insights into human relationships. A child who is a genius at chess can look at a board and see a universe that is invisible to the wisest adult.

This is both a blessing and a curse. There is a beauty to the gift, but it does not necessarily lead to greater happiness in life as a whole.

At the end, it all comes down to that choice faced by the young player that A. S. Byatt writes about: the choice between truth and beauty. What makes us men is that we can think logically. What makes us human is that we sometimes choose not to.

Now go watch the movie!

1. According to Wikipedia, “at the time Pomeranc appeared in the film, he was also one of the U.S.'s top 20 chess players in his age group.” He made two other movies as a kid and then quit acting. He now appears to work as the director of communications…for chess.com.

2. In this excellent Rewatchables podcast review of Searching, the hosts briefly discuss the implicit racism in the movie, insofar as Vinnie and all the other Black chess players are portrayed as selling or consuming drugs. It mars an otherwise beautiful film.

3. I also learned that from age three onward, Josh referred to his father as “Fred” instead of “Dad.” My daughter tried this with me too and I shut that down right quick.

What a wonderful piece! What sticks with me is this line you quote from Josh Waitzkin's book: "My demons became our demons. If I lost, you felt pain. You were shattered with me. That is something that runs parallel to a loss of love." I take that to mean that they felt pain together in the absence, or loss, of love. There was no love anymore because success had become the accomplishment in chess. That's what the teacher is trying to point out in the movie clip. Perhaps that's a simplistic way to see the larger point of your essay; a rules-based way to see the world is only part of the full experience. And a neural network cannot really convey such an experience, even though its chatbot is very good at pretending it can.

There is an even better game for cognitive scientists - 20 Questions! I discuss what lessons it can teach here - https://alexandernaumenko.substack.com/p/intelligence-and-language