My plan this week was to write about an interesting new study from the Santa Fe Institute that explores whether the most advanced LLMs are improving on complex reasoning tasks for the wrong reasons, but then my friend Dave Karpf wrote about OpenAI as Enron and linked to my essay from January on this same topic, leading to a slew of new followers (welcome, everyone). He then goaded me on Bluesky to write even more about OpenAI shadiness, so here we are.

Ten months ago, I made a straightforward series of observations:

OpenAI’s CEO Sam Altman was claiming that the creation of “artificial general intelligence”—something as smart as humans—was a solved problem, and that OpenAI was already moving on to creating superintelligence.

At the same time, there were multiple stories in major journalistic outlets suggesting that the training for OpenAI’s next flagship model, ChatGPT5, was not going well, and that OpenAI was only getting modest performance improvement from the addition of more data and computing power to their existing product.

These two facts were in obvious tension with each other, as academics like to say. They could not both be true.

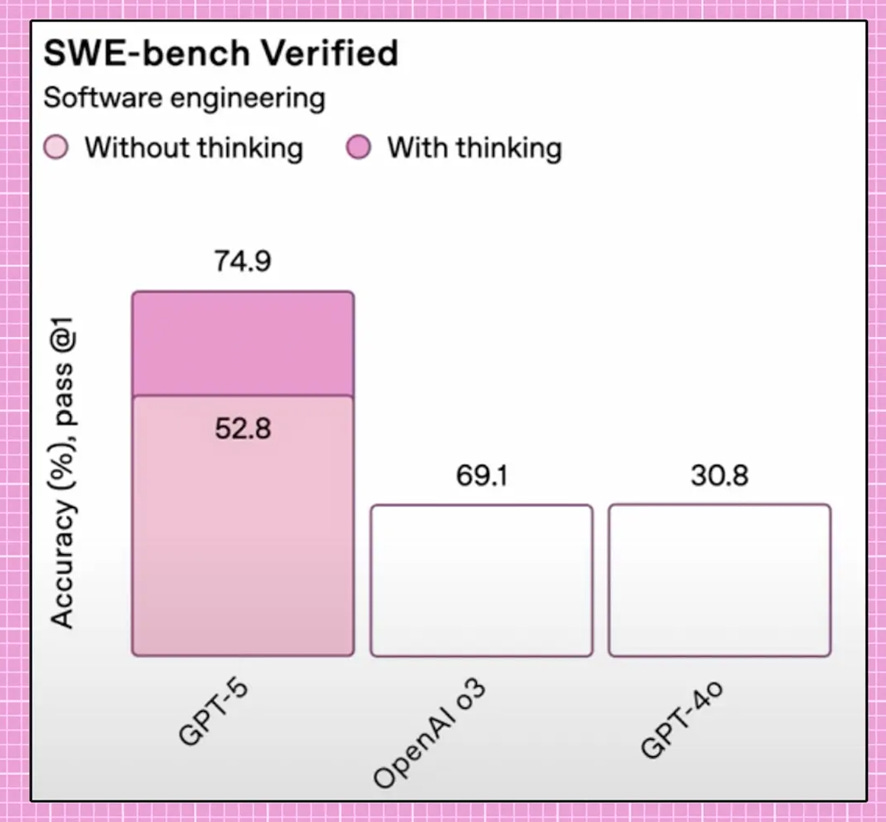

So, now it’s mid-October 2025, and we know for certain what many of us suspected back in January: Sam Altman was full of shit. We know this because in August, OpenAI finally released ChatGPT5 and…it was a giant meh. The new model was not and is not an artificial general intelligence in any way, shape, or form, much less “superintelligent.” That jump in capabilities that people experienced from GPT3 to GPT4? Did not happen. And even the most enthusiastic AI boosters admitted the rollout was lame. Might I point you again to this unintelligible AI-created “graph” that OpenAI used at the time of GPT5’s launch?

Yet, despite the (ahem) abundantly clear slowdown in model performance, Altman continues to double down on promises of our glorious AI future, insisting that OpenAI is really and truly building an economic Death Star that will obliterate all other technology companies before upending every aspect of life as we know it. The stories are intensifying in their absurdity. Build a Dyson sphere around our solar system? Sure, yes. Raise $10 trillion to cover the Earth in data centers? Of course, who could object? Everything in the entire economy must be in service of improving the chatbots and funny video generators.

It’s all so ludicrous. And, tragically, it’s driven poor Ed Zitron insane. But more cracks are starting to appear even among the AI devout. To wit: Last week Ben Thompson in Stratechery, he who gushes about AI regularly, wrote “we’ve obviously crossed the line into bubble territory, which always was inevitable.” Oh, ok then! Meanwhile Matt Levine, who keeps an admirable even keel, observes that OpenAI is announcing deal after deal that’s premised on the announcement of the deal itself being the very thing that finances the deal, leading to this masterful paragraph:

The financing tool is, you go to Broadcom and you put your arm around their shoulder and you gesture sweepingly in the distance and whisper “omniscient robots” and they whisper “yesssss” and you say “we’ll need a few hundred billions dollars of chips and equipment from you” and they say “of course” and you say “good” and they say “do you have hundreds of billions of dollars” and you whisper “omniscient robots” again and they are enlightened. And then you announce the deal and Broadcom’s stock adds $150 billion of market capitalization and you’re like “see” and they’re like “yes” and you’re like “omniscient robots” and they’re like “I know right.” That is the financing tool! In some loose postmodern sense, OpenAI has borrowed hundreds of billions of dollars from Broadcom. You can buy hundreds of billions of dollars of equipment to build the robots to sell for money to pay for the equipment, because you’ve gotten everyone to believe.

Because you’ve gotten everyone to believe. Again I think back to my Enron experience, when I was surrounded by smart-if-evil Ivy League trained investment bankers who simply could not fathom that anyone would question the ability of this high flying company to spin deregulated power grids into gold. Enron got everyone to believe. But the veil eventually was pierced, and the collapse was swift.

Will this happen to OpenAI? Somewhere Zitron screams, YES, IT MUST, OH GOD HOW CAN IT NOT, PLEASE RELEASE ME FROM MY HELL. But on the other hand, look at Tesla, a relatively small car company that somehow is worth more than the entire remaining automotive industry combined, with a neo-Nazi owner to boot. When I was studying business we thought markets were mostly rational, but if you still believe that today, whoo-boy, look around.

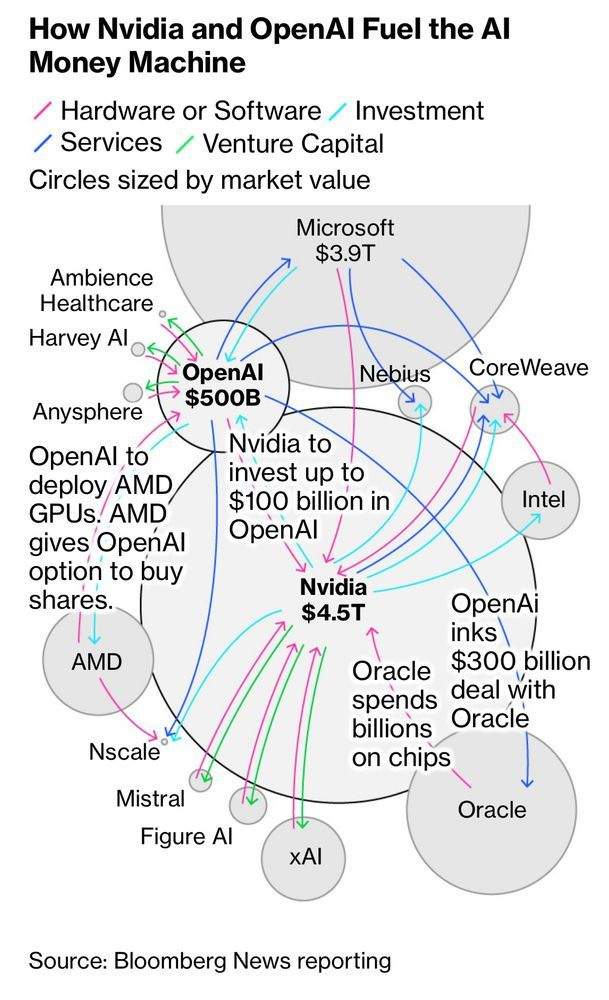

Here’s a good Rorschach test, look at this graphic and tell me what you see:

When I look at this, I see a disturbing web of incestuous financial relationships that suggests the entire AI industry is resting on a precarious foundation that may collapse like a wobbly Jenga tower if one brick is pulled too quickly. But you might just as easily think, “huh it seems like the entire technology sector is now wedded to each other, and this creates a nice set of interlocking supports, like NATO but for the economy as a whole.” A bubble, yes, but everyone’s bubble. Of course, we might then remember that the entire global economy did crash in 2008 when Lehman Brothers went bankrupt after everyone suddenly realized that the subprime lending bubble that we’d been ignoring was, uh, no longer something that could be ignored.

Surely no lesson to take away from that history, right? No reason to worry. Omniscient robots, coming soon!

One last time: There are massive protests planned across the US this Saturday, October 18. Please stand up, speak out, and fight back. Dressing up as a frog is optional.
