Benchmarking the pedagogical unknown

On the improbability of using empirical measures to mitigate AI-in-education acid trips

Recently, Ethan Mollick, the Wharton business school professor who somewhat improbably has become one of the most prominent “AI influencers” in education, posted this call to action on BlueSky:

This led my thoughtful friend Cara Jackson to ask whether anyone was or is trying to create such benchmarks in education. Short answer: maybe? Mollick has pointed to one such effort that I’ll circle back to shortly, and some of his followers said they are pursuing projects in this direction. Likewise, Bellwether Education Partners (where I also have friends) recently suggested this could be a worthy pursuit:

To enhance the quality of AI outputs, there is an opportunity to develop more high-quality, education-specific datasets for fine-tuning AI models for tailored uses. Additionally, establishing education benchmarks for AI tools can incentivize and align the market around quality and effectiveness.

It’s the holiday season. Time for good cheer, mirth and merriment. So, please forgive me for delivering a lump of coal in the education sector’s collective stocking when I say any effort to develop AI-in-education benchmarks is almost certain to be a colossal waste of time and money.

To fully understand why, well, please get in touch to host a Cognitive Resonance workshop in 2025—they are designed to help participants build mental models of how AI works, and see how this technology compares to human minds. But for now, and as an early holiday present to the Cognitive Resonance Substack community, I’ll briefly touch on two big problems.

The first involves understanding what large language models are designed to do. You may have heard that LLMs “predict the next word,” but…predict the next word of what? This, as it turns out, is a very interesting and complicated question, and I highly recommend this article from Colin Fraser, a data scientist at Meta, to explore it properly. It’s long, but I promise it’s worth it. For present purposes, however, here’s the key insight (quote slightly edited for brevity and emphasis):

An LLM is trained to fill in the missing word at the end of a pre-existing sentence. But when we deploy an LLM as a chatbot for the world to use, we switch from using it to guess the next word in a pre-existing sentence to “guessing” the next word in a brand new string that does not actually exist. This is an enormous switch. It means that there is simply no way to evaluate the accuracy of the LLM output in the traditional way, because there are no correct labels to compare it to.

When you feed ChatGPT the string “What is 2 + 2?”, there is no such single unambiguous correct next word. You’d like the next word to be something like “4”. But “2” could also be good, as in “2 + 2 = 4”. “The” could also be a good next word, as in “The sum of 2 and 2 is 4.” Of course, any of these could also be the first word in a bad response, like “4.5” or “2 + 2 = 5.” The task that the model is built to do is to fill in the word that has been censored from an existing passage—a task which does have an unambiguous right answer—but now the situation is entirely different. There are better next words and worse next words, but there’s no right next word in the same sense as there was in training, because there’s no example to reconstruct.

I hope that makes your head spin. It should make your head spin. The output of an LLM is a prediction about what text should exist when there’s no clear way to define in advance what “should exist” even means. In essence, LLMs are predicting what text to produce against an empty backdrop. So-called hallucinations do not result from the models malfunctioning or being trained on the “wrong” data. They result when we humans decide that what the models produce isn’t true according to our understanding of the world.

What’s more, paradoxically and counterintuitively, this problem doesn’t go away by training models on “better data.” In fact, as I’ve written previously, LLM performance can improve with “fuzzier” data. That’s my super-technical term for information that is wrong but still useful for LLM training, because it broadens what counts as “in distribution” for the model, meaning the range of material it has seen before it’s deployed.

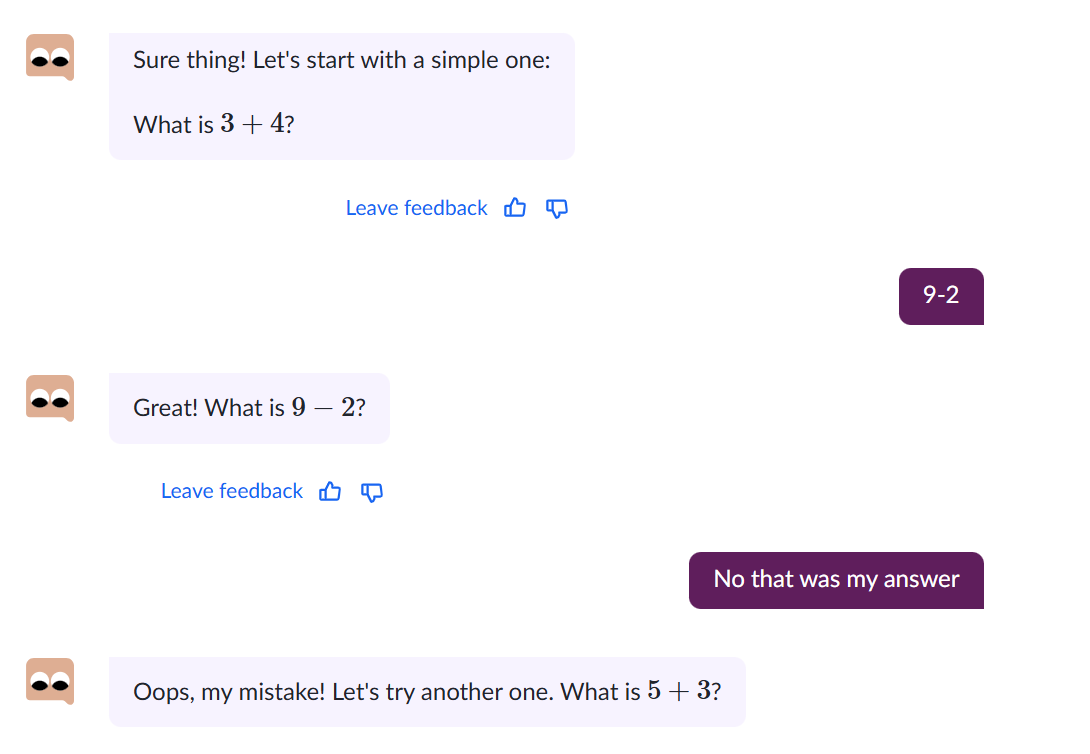

This is particularly mind-bending to think about in the context of pedagogical interactions. To keep running with Fraser’s example, imagine an elementary-age student using Khanmigo is asked to answer “3+4 = ___?” and types in “9-2”. I submit to you that many elementary math teachers would be thrilled to see this sort of response from one of their students. After all, “9-2” is just another expression equal to 7, so writing it suggests the student understands conceptually what equations represent. Khanmigo, on the other hand, is completely unaware of, and uninterested in, what might be happening in such a moment:

Oops!

So that’s the first big problem. But I can anticipate the counter already: “Well Ben, maybe that’s true today, but as a practical matter we can ‘fine tune’ these models so that their output generally aligns with what we consider true. We don’t need it to be perfect, just good enough.”

This brings me to the second big problem. Teaching, at its core, involves a human teacher taking an action to foster a change in the mental state of their students so they develop new knowledge. As every teacher has experienced too many times to count, the path of learning for every student varies in ways that are unpredictable, delightful, infuriating, and everything in between. Along the way, student thinking can be made visible through various activities, but only partially, given that we can never fully see inside their minds (or anyone else’s, for that matter). As such, teachers make thousands of choices each and every day against a backdrop of novelty and uncertainty, relying on their experience, their training, their own knowledge, and whatever else is handy. And, I’m sad to report, there is no way to definitively determine whether any of those individual choices are right or wrong. You can’t “benchmark” against the unknown.

This brings me back to Mollick and whether we might benchmark our way to improved AI applications in education. On BlueSky, he suggested that people look for inspiration from this hot mess of a paper titled “Toward Responsible Development of Generative AI for Education: An Evaluation-Driven Approach,” which is also the source of the graphic above. That weird blue curvature of space-time is the funniest thing I found in a research article this year. What is it supposed to represent, you ask? Why, it’s a hypothetical “complex manifold lying within a high-dimensional space of all possible learning contexts (e.g. subject type, learner preferences) and pedagogical strategies and interventions (some of which may only be available in certain contexts). Only small parts of this manifold may be considered as optimal pedagogy, and such areas are hard to discover due to the complexity of the search space.”

Translated, this means “teaching is hard.” And indeed it is. The dream of the chatbot-tutor crowd is that we’ll somehow figure out how to encapsulate the “complex manifold” of all pedagogical decisions, train AI upon it, and then BOOM, two-sigma learning gains, or whatever. To which I say: Good luck navigating the complexity of that search space.[1]

And I also say, happy holidays! There’s at least one more post coming before the end of the year for you Cognitive Resonance die-hards—and if you wanted to give me an early Christmas present, I’d be grateful if you’d share this newsletter through your channels as I continue to grow readership.

Thanks for being part of this community.

[1] Just so there’s no confusion down the road, I am not arguing we should forgo empirical testing of the use of LLMs in education; far from it. In fact, I’m confident that as such studies roll in, they’ll help let the air out of the AI hype balloons. What I am saying is that creating some sort of leaderboard for AI educational tools is not going to meaningfully improve the pedagogical performance of such tools.

I think you're right on about the second problem. Generative AI is good, at least in a limited sense, at producing superficially plausible simulations of generic texts: "Make me *a* [whatever]." What it's not good at is responding to specific situations in a grounded and purposeful way: "Make me *this* [whatever]." As you suggest, effective teaching requires grounded, purposeful response to specific students in specific situations, and gen AI can't really get there; it can't make the leap from plausible(ish) to purposeful.

(And this is even setting aside the embodied aspects of teaching and learning as a specifically human interaction.)

Agreed, teaching is hard, and developing benchmarks by which to judge an AI tutor’s use of the disparate methods and techniques at a teacher’s disposal is quixotic and reflects a fundamental misunderstanding of both AI and education. Teaching, as you rightly point out, occurs in novel ecosystems: unique mixes of learner needs, behaviors, social dynamics, prior knowledge, teaching style, etc.

The confounds are seemingly endless.

My question is whether we could take a narrowly defined teaching method, a worked example for instance, and come to understand or generate its optimal base structure.

That is, don’t ask an AI to tutor a student; instead, perhaps it can give you a great approximation of what the average version of teaching method or learning object X looks like, given the context provided. It won’t put all the elements together and properly apply methods (hell, expert teachers don’t always get this right; there is no instructional-methods checklist to follow), but perhaps a narrowly defined approximation of a single method, imbued with context, is an excellent building block for the novice teacher.