AI is Stone Soup

An old tale with modern relevance

It’s come to my attention that many of you are unfamiliar with the folktale and quasi-socialist parable of Stone Soup. So today we’re going to remedy that, and in so doing perhaps develop a new way to think about AI and what it really represents. Our source text is the version that was written and illustrated by Marcia Brown, the same version that was read to a young Ben Riley at some point in his tenure at Minnesauke Elementary School.

It goes like this:

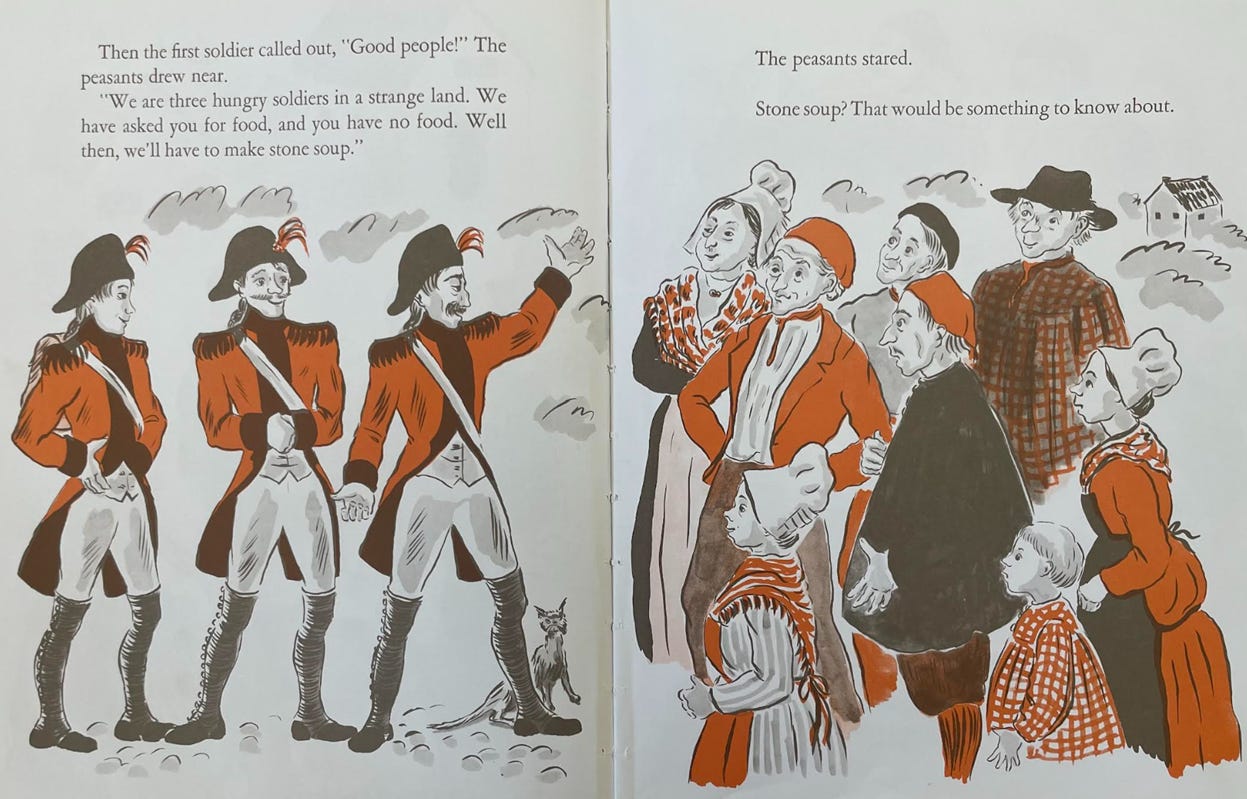

Three soldiers of unknown nationality, “on their way home from the wars,” are hungry, tired, and destitute. They enter a small and impoverished village and ask the locals if they might spare some food. The locals, being impoverished, promptly tell this small platoon of unwanted occupiers that no, they do not have any food to share with them, and that they should f*** right off. (Not a direct quote.)

So the soldiers get creative.

Befitting the name of this folktale, the soldiers begin to boil some water to make some soup. Using some stones. The ~~idiotic~~ curious peasants, perhaps moved by their latent sense of European egalitarianism, suddenly find that they do in fact have some spare carrots, cabbages, potatoes, barley, onions, chicken and beef that they can each add to “flavor” the soup. (They also inexplicably add buttermilk, which seems like a disastrous culinary move for a soup with a water base.)

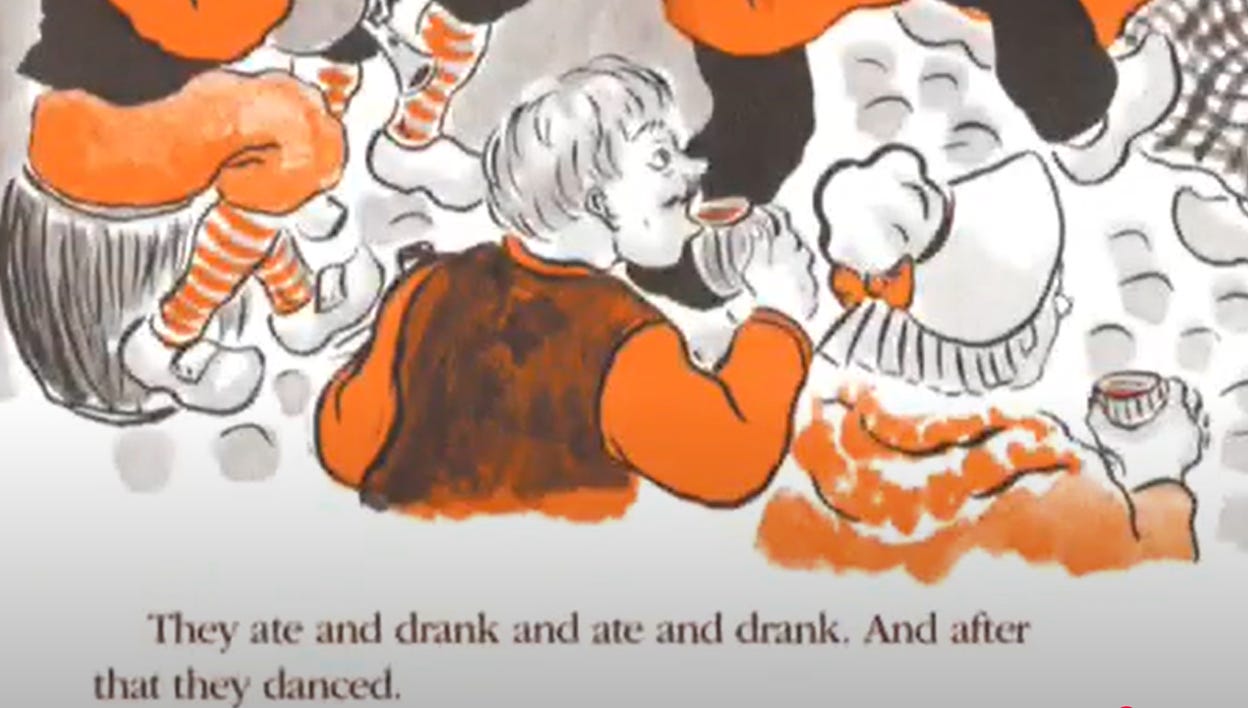

The soup smells good and now the villagers feel collectively invested in its creation—this is how we eventually got Karl Marx—so they host a big feast. The stone soup, it is delicious. And, in a plot twist I did not remember from Ms. Wasserman’s 2nd-grade reading, an 18th-century-style rave ensues thereafter, and everyone gets very drunk.

After passing out, the soldiers quickly and wisely make their getaway the next morning, but not before offering a cryptic-yet-smug goodbye to the ~~clueless~~ gullible peasants they just partied with:

My point with all this, of course, is that children’s stories written in the 1970s from a Marxist-Leninist perspective are profoundly surreal. No no, my obvious point is that AI is the modern-day equivalent of stone soup, a technology that only exists, and provides whatever value it provides, because of the very human knowledge and labor that makes it what it is—including a great deal of knowledge and labor that, as many have observed, has simply been expropriated for private gain without compensation to human creators.

Credit for this idea goes to Alison Gopnik, a cognitive scientist at UC Berkeley, who’s been invoking the AI-as-stone-soup metaphor for some time. Here’s how she retells the tale with AI in focus:

Some tech execs came to the village of computer users and said, “We have a magic algorithm that will make artificial general intelligence from just gradient descent, next-token prediction, and transformers.”

“Really,” said the users, “that does sound magical.”

“Of course, it will be even better and more intelligent if we add more data — especially text and images,” said the execs.

“That sounds good,” said the users. “We have some extra texts and images we created stashed away on the internet — we could put those in.”

“Great,” said the tech execs. “But, you know, this version still says a lot of dumb things. It would be even more intelligent if we could get humans to train it directly with reinforcement learning from human feedback.”

“Sure,” said the users. “There are whole villages in Kenya that will jump at the chance.”

“OK,” said the execs, “now the last thing that would really make it royally intelligent is for the users to do clever prompt engineering to ask it just the right questions in the right way.”

“Sure,” said the users, “we will think hard and figure out how to prompt it.”

“Good,” said the tech execs, “this will be a truly, magically intelligent machine.”

“And to think,” said the users as they shelled out for the latest version, “how magical that this artificial general intelligence was made from just a few algorithms!”

And then, presumably, the tech execs and the users throw a giant cuddle party where everyone takes ketamine and forms polycules. Gotta modernize the story for 2025.

Gopnik invokes this metaphor with respect to AI generally, but we can find AI-as-Stone-Soup in education specifically. Just last week, for example, I referenced flaws in a World Bank project involving a six-week “AI tutoring” initiative in Nigeria, at least as described in blog posts from the lead researcher. Just as I was hitting send on my essay, the formal write-up of the study dropped and, well, get ready to taste some stones.

As an appetizer, please go read Michael Pershan’s excellent summary of what this study actually entailed. No really, click the link, I promise it’s worth your time. Among other things, Pershan affirms the general methodological issues I previously flagged in my blog-post review. But, more relevant to my thesis here, he also offers sharp insights into the pedagogical activity that was actually taking place in these African schools:

The researchers told kids to prompt the model to act as a “well-seasoned English grammar tutor.” They instructed the model to “reply to my questions in a motivational and engaging tone.” The model was told to ask questions or propose exercises on the grammar topic of the day, to provide hints or corrective feedback when needed.

OK, fine. But is this tutoring?

I mean…not really? It’s just interacting with an LLM, which is a really cool dynamic text generator, but isn’t acting as a teacher. ChatGPT didn’t decide what students were ready to learn. It didn’t create the lesson. It didn’t even do all the instruction: “At the end of each session, the students were encouraged to reflect and discuss lessons learned and challenges encountered during session to facilitate knowledge sharing among the group,” the researchers write. That sounds like teaching! The human is doing it.

This is not school with a robot teacher. It’s more like when I tell my own students to take out Chromebooks and use a math practice app like Deltamath—except that in this case it’s an LLM, which is functioning like a magic, dynamic exercise book.

The world seems set on calling this sort of thing a “tutor” but I think that’s absurd. This is not a tutor. If you insist, call it AI-driven practice software.

Pershan is not alone in thinking this way. In fact, a group of scholars observed that when it comes to this particular education intervention in Nigeria, it’s best to think of it as “’computer assisted instruction’ integrated into teachers’ instruction and curriculum” (my emphasis), rather than as “’computer-assisted learning,’ which operates independently.” By scholars I mean the very same researchers who conducted this study, and who dropped this doozy of a disclaimer into a footnote on page 21.

Now for a few other questions:

Did you know that these after-school English lessons were explicitly tied to classes the students had taken earlier in their regular school day? That, in essence, this was 90 minutes of add-on instruction to concepts the human teachers had already taught? Does that change your perspective at all on the role of AI in this project?

Do you find it as hilariously amusing as I do that, for all the supposed benefits of this groundbreaking digital technology, both teachers and students were given printout copies of “session guides” with suggested prompts they could type into the LLM? Does it occur to anyone that having preprinted questions and answers is what we also call “a textbook”?

Do you think any of the Nigerian students wondered why they were given guides that included AI-generated pictures of students that appear stereotypically gendered? And do you think they wondered why books are floating next to the boy robot?

Did you know that, in addition to the teachers guiding students in how to use the LLM, the World Bank also trained and provided human “monitors” to “oversee the administration of the assessments and ensure compliance with testing protocols,” who were further tasked with ensuring “that the intervention was carried out as intended in each school” and “responding promptly to any challenges”? Do you find it at least possibly relevant to the success of this project that the adult-student ratio for this after-school instruction was 12:1? Does any of this sound easily scalable to you?

Do you find it at least slightly troubling that the World Bank felt compelled to issue a de facto pro-AI propaganda video in support of this effort that makes use of an avatar of a Black woman with an English accent speaking in a flat monotone and sharing dialogue that appears AI generated? Do you perhaps smell the whiffs of cultural imperialism here?¹

Do you wonder how this entire project was funded by the Mastercard Foundation, given that no one has used a Mastercard since the 1970s?

If there’s any good news in all this, it’s that noted AI-in-education influencer Ethan Mollick offered a nuanced, measured take on the results of the Nigerian study, and refrained from highlighting the most spurious and speculative aspect of their reported results, the one where they simply multiplied the effect they found from a six-week intervention out to a full year and called it good.

Oh who am I kidding, of course he did the very opposite:

Remember, the Stone Soup soldiers were assholes. And so too are those who rush to overhype AI in education while diminishing the value of human labor, and the value of human teaching.

Such men do not grow on every bush!

CORRECTION: Although the copy of Stone Soup I found in my local library listed 1975 as the year it was copyrighted, I’m informed by my friend Dan Willingham the book was actually written in 1947 (and Wikipedia agrees). I’ve modified the sentence that made reference to 1970s children’s books as surreal to instead attribute said surrealism to Marxist-Leninism.

Anand Sharma, an ed-tech entrepreneur based in India, had a strong adverse reaction to this study last week when it was shared on a listserv we’re both part of. With his permission, I’m quoting concerns he raised in our subsequent email correspondence regarding the World Bank’s education efforts:

“I don't doubt the relevance of item response theory or p-value in education research. The real problem is in putting these on steroids to reduce and explain every damn ed system of important concern. The end result is the commodification of learning outcomes in favour of what can be measured easily, and scaled cheaply (the cost effectiveness analysis nonsense), instead of what matters for long-term development of the child.

“My views on how this perceived nature of ed R&D in my country relates to AI are still evolving - but the signs of things getting worse are already out; that World Bank study I tore into will sadly be celebrated and pushed down the throat of policymakers.”

I used to read the animal version of “Stone Soup” by Anaïs Vaugelade to my kids. Instead of three soldiers, a wolf was responsible for the stone soup and the other animals happily got the ingredients. A very fitting analogy… Hilarious! Thanks for your newsletter! Best regards from Germany

Step 1) get people to buy in through hype, fomo and propaganda 2) undermine their confidence in the value of the old ways 3) alienate them from use of the old ways for long enough that they couldn't go back even if they wanted to... Stone soup's ready everyone... Great piece!