Adopt the adversarial attitude toward AI

It's not cherry-picking to focus on the failures of large language models

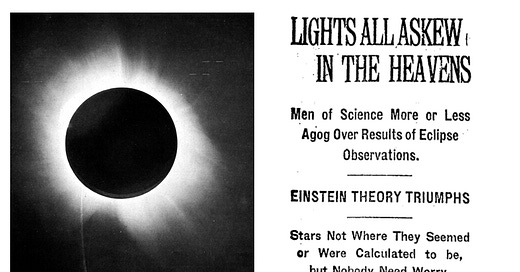

It’s one of the most epic moments in scientific history, and it happened in 1919. Albert Einstein, who you’ve heard of, had developed his theory of general relativity, which you’ve also heard of, and predicted that during a total solar eclipse the observed positions of some stars near the Sun would appear shifted, because the Sun’s gravity bends the path of their light. Importantly, the size of this predicted shift arose from general relativity’s conception of space-time as curved; flat Newtonian physics predicted a deflection only half as large. So scientific teams were dispatched to Africa and Brazil to set up telescopes and take photographs and measurements, and lo, the observations confirmed what the new theory had predicted: the heavens moved. Einstein became famous overnight, and the scientific paradigm for understanding our world shifted dramatically.

Now imagine that you, for some unknown reason, wanted to revive the classical Newtonian model of the universe. How would you do it? Well, who knows, but one thing that definitely wouldn’t work would be pointing to the centuries of humanity successfully using Newton’s basic model of the world as somehow relevant to your case. “If it disagrees with experiment, it’s wrong,” the physicist Richard Feynman said, “and in that simple statement is the key to science.” When Newton’s laws disagreed with experiment, they were proven wrong, no matter how useful they had been (and frankly still are) in various contexts.

OK, so what does any of this have to do with AI? Recently, there’s been a small wave of “critiques of AI criticism” articles contending that AI skeptics are being just a little too hard on poor ol’ AI models. For example, Harry Law of the University of Cambridge (and formerly of Google DeepMind) has written one titled, tellingly, “Academics are kidding themselves about AI.” On the whole, it’s unobjectionable; in fact, he offers a number of very reasonable suggestions for improving AI research, such as “stay current” with the latest models, “embrace humility” about their complexities, and “be creative” in developing new conceptual tools to understand them. Yes, agreed, no argument here.

But then there’s this:

> Cherry-picking: Only citing failures while ignoring successes or dismissing benchmarks that contradict your thesis sounds more like advocacy than scholarship. Intellectual honesty means engaging with the full empirical record, especially the parts that surprise you.

On its face, this too seems inoffensive (who could possibly argue for cherry-picking data?), but I think there’s an interesting mistake happening here. Law’s suggested approach for evaluating AI models is exactly what we might expect from, well, someone who works (or worked) in the AI industry and is interested in assessing the overall capabilities of AI models. That’s a perfectly reasonable thing to care about, as far as it goes, but that’s not the role of science.

Let me unpack that claim. When AI enthusiasts argue that AI models already possess “artificial general intelligence,” that is, that they are generally intelligent in the way humans are, they are making a claim about the world as it is today. Similarly, when AI enthusiasts suggest that these models will soon surpass human intelligence, they are making a prediction about our future. Either way, scientists and researchers who doubt these claims and predictions are under no obligation to undertake some sort of utilitarian calculation to adjudicate their merits. None, zero, zilch. It’s akin to suggesting that Einstein was somehow obligated to “engage with the full empirical record” of Newtonian physics when articulating his theory of general relativity (see, there’s the tie-in).

Instead, when AI-skeptical scholars point to examples of AI models failing at cognitively challenging tasks, whether chess or math Olympiad problems or the Tower of Hanoi or whatever, we see that as sufficient to puncture the grandiose claims of AI enthusiasts who insist we are on the verge of superintelligent robot overlords. And that’s all we have to do. This isn’t “cherry-picking” the data. It’s falsifying the nonsense theories advanced by AI enthusiasts, including some ostensibly in the research community (cough Ethan Mollick cough). Once again: If it disagrees with experiment, it’s wrong. Neuroscientist Paul Cisek made this point first.

That said, I do think we will improve understanding of what generative AI models are doing through more rigorous analysis of their capabilities. To that end, Melanie Mitchell recently pointed to this interesting paper titled “Principles of Animal Cognition to Improve LLM Evaluations,” wherein a group of researchers, drawing from techniques developed in assessing the intelligence of non-human animals, offer five principles to guide future research on the “intelligence” of AI models. Here’s the list:

1. Design control conditions with an adversarial attitude
2. Establish robustness to variations in stimuli
3. Analyze failure types, moving beyond a success and failure dichotomy
4. Clarify differences between mechanism and behavior
5. Meet the organism (or, more broadly, intelligent system) where it is, while noting systemic limitations

I like all of these, but it’s that first one I want to focus on here. The authors explain:

> Design control conditions with an adversarial attitude. Within animal cognition research, it is common for a single study to be accompanied by multiple follow-ups, each controlling for an alternative explanation; often, experiments are directly or conceptually replicated across many different labs after publication to test robustness and probe plausible alternative explanations. This adversarial approach––in which authors take it upon themselves to propose alternative explanations to their theories and then design and run studies to specifically test them––enables stronger inferences about the mechanisms underpinning a given behavior.

Yeah! That’s what I suspect most AI skeptics and critics in the research community really want to see: an ethos of trying to disprove that these tools are sentient. AI researchers should be their own best skeptics. By investigating LLMs with the same principles that animal-cognition researchers use, we can “go beyond simplistic binary statements of whether LLMs ‘do’ or ‘don’t’ exhibit advanced concept learning and reasoning capabilities” and instead “conduct in-depth, nuanced analyses of the range of behavior these models exhibit—and surface the capacities they may (or may not) possess.”

Sounds good to me. And with that…I’m out! Not for good, settle down; I’m just taking a week or two off from this newsletter for my summer break. In the meantime, please consider (re)reading Frederick Douglass’s masterful speech “What to the Slave Is the Fourth of July?” It’s an enduring reminder of both the promise of American ideals and our ongoing failure to achieve them.

Thank you for writing this. I recently designed and conducted my first DIY AI experiment, and the process reminded me that the scientific method is built around trying to disprove one's own beliefs. All the pre-work of theorizing and researching is meant to lead up to the experiment, and the experiment should be designed to independently test whether that theorizing holds up in the real world. It is so fundamentally absurd that AI evangelists do the inverse of this that it's mind-boggling to even begin correcting their logic. Definitely printing this one out to come back to when I need a sanity check to pull myself out of the churn ...

I just wrote a long note on the affect heuristic, a bias that I think is pretty prevalent in AI discourse. I’m not necessarily accusing anyone in particular of anything; I just think it’s good to keep in mind. I’m just gonna paste it here:

“The psychologist Paul Slovic has found that when people have positive feelings toward a technology, they tend to list a lot of reasons in favor of it and few against it. When they have negative feelings toward a technology, they list a lot of risks and very few positives. What’s interesting is that when graphed, these results show an incredibly strong inverse correlation between perceived risk and perceived benefit, even though that doesn’t match how technologies work in the real world. Many technologies are high risk AND high reward, and many things are low risk AND low reward.

To show the strength of the underlying bias, Slovic took the group of people who disliked a technology and showed them a strong list of benefits from that technology. He found that afterward, people began to downplay the significance of the technology’s risks, even though their knowledge of those risks hadn’t changed. Had they been acting fully rationally, their assessments of the risk would have stayed the same. Our minds are not designed to hold incredibly nuanced understandings of things.

I feel like I see this happening in AI discourse sometimes. People who are pro-AI might downplay environmental costs and the dangers of piracy. People who are anti-AI might dislike it because of its effects on the art industry, but they also might refuse to ask GPT questions because of the “environmental impact,” despite the fact that conversations with chatbots take up only 1-3% of AI’s total electricity cost, and that streaming Spotify or YouTube for a non-trivial amount of time is much more environmentally costly than talking to GPT. In both cases, people form inaccurate assessments of the risks and benefits of using AI.

The takeaway from this note is to understand your biases and be careful about how you evaluate things. Something with strong negatives might also have strong positives, and unless you’re careful, you might be evaluating it based on emotion rather than reason.

(A summary of Slovic’s work appears in *Thinking, Fast and Slow*, by Daniel Kahneman, which is where I found it. The name of this bias is the affect heuristic.)”